
Ready to get started?

What is a Large Language Model (LLM)?

LLMs can perform an impressive range of tasks without requiring additional specialized training.

They excel at understanding complex queries and can generate coherent, contextually appropriate outputs in multiple formats and languages.

The versatility of LLMs extends beyond simple text processing.

Their ability to learn from context makes them particularly valuable for both personal and professional use.

Why run an LLM locally?

Running LLMs locally provides enhanced privacy and security, as sensitive data never leaves your device.

This is particularly crucial for businesses handling confidential information or individuals concerned about data privacy.

Local deployment offers significantly reduced latency compared to cloud-based solutions.

Cost efficiency is another major advantage of local LLM deployment.

To run an LLM locally, your computer should have at least 16GB of RAM.

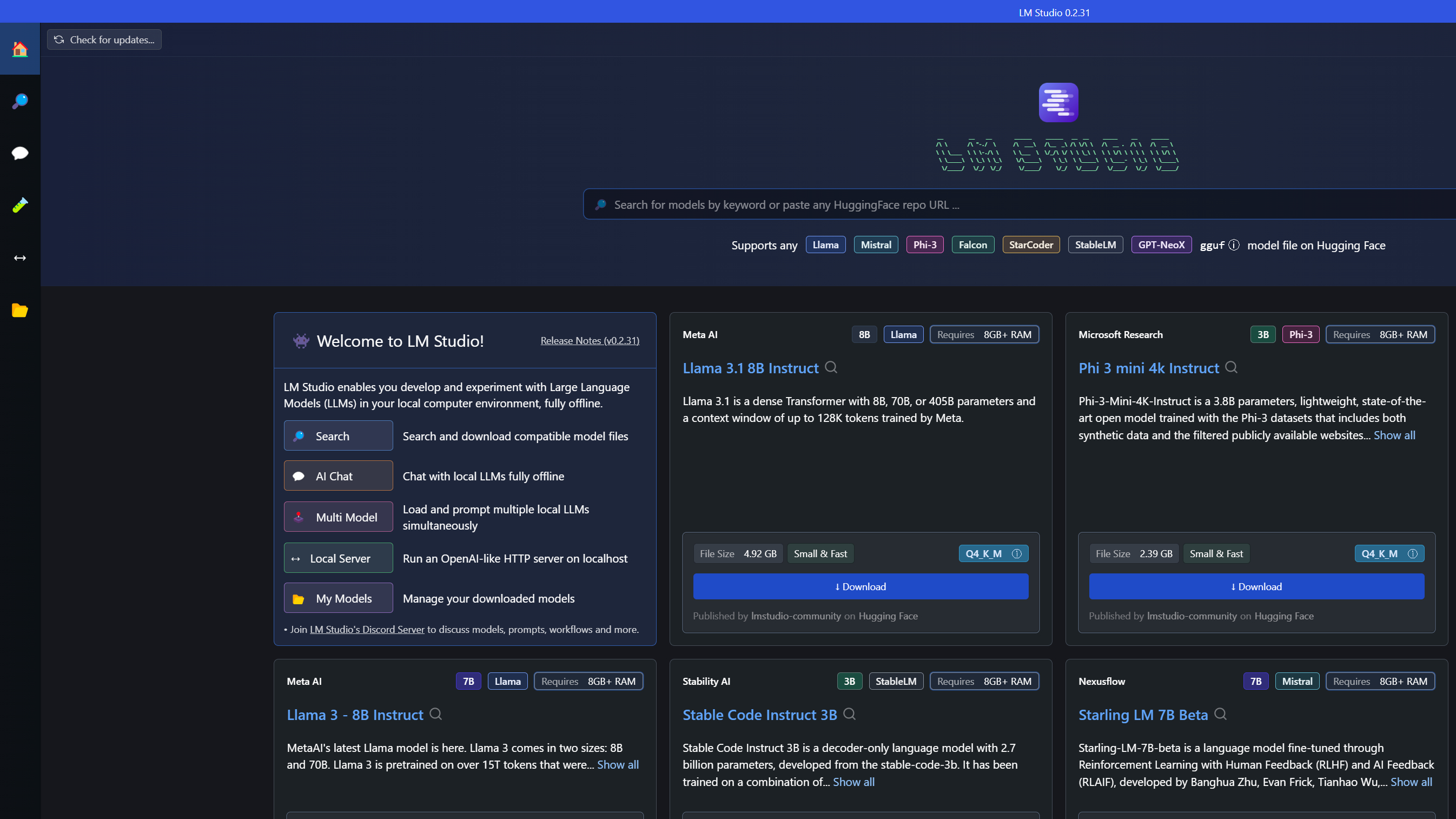

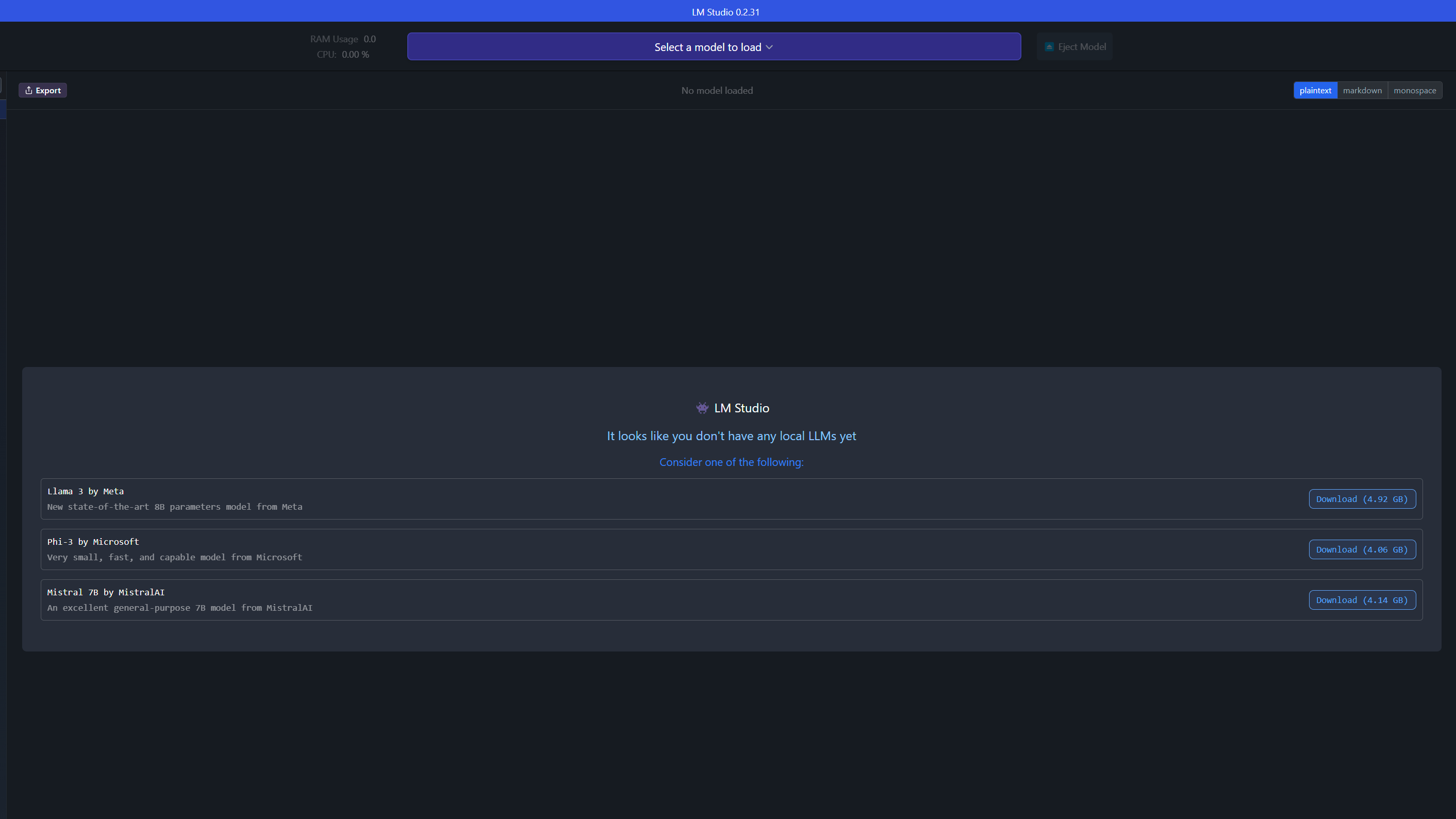

LM Studio

LM Studio is one of the easiest tools for running LLMs locally on Windows.

Start by downloading the LM Studio installer from their website (around 400MB).

Once installed, open the app and use the built-in model browser to explore available options.

When you’ve chosen a model, click the magnifying glass icon to view details and download it.

After downloading, click the speech bubble icon on the left to load the model.

To improve performance, enable GPU acceleration using the toggle on the right.

This will significantly speed up response times if your PC has a compatible graphics card.

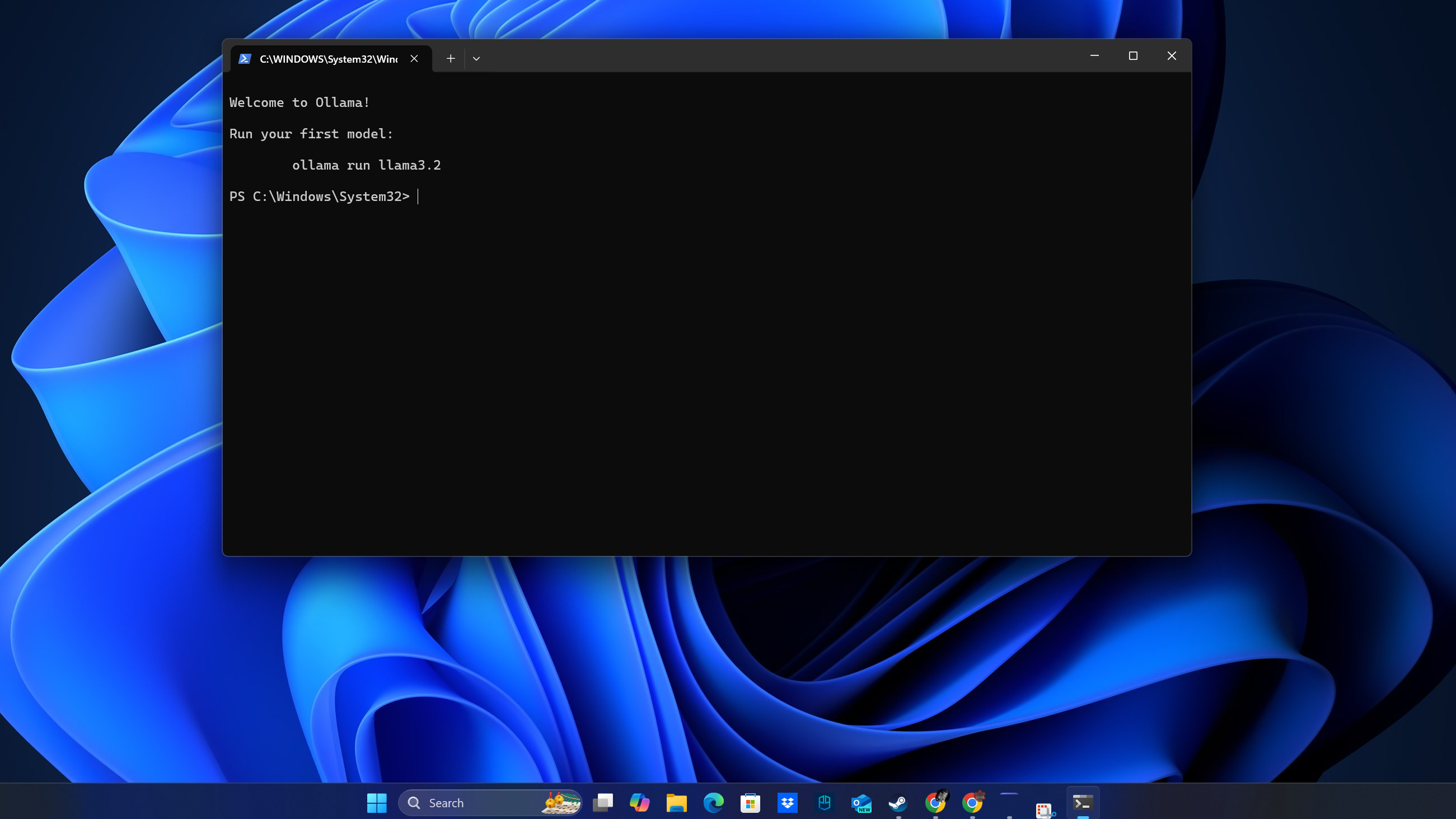

Ollama

Ollama is another excellent option for running LLMs locally.

Start by downloading the Windows installer from ollama.com.

To choose a model, visit the Models section on Ollama’s website.

The model will automatically download and set up for local use.

Ollama supports multiple models and makes managing them easy.
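Once a model is installed, you can also talk to it from your own scripts. The sketch below is a minimal example, assuming Ollama's local server is running at its default address (`http://localhost:11434`) and that you pass in the name of a model you have already downloaded. The helper names `build_generate_request` and `generate` are our own, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama's local server is running on its default address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    # With "stream": False, the full answer arrives in the "response" field.
    return body["response"]
```

Calling `generate("your-model-name", "Explain what a local LLM is in one sentence.")` should return the model's answer as a string, provided the server is running and the model name matches one you have installed.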

Homebrew

The simplest way to get started on Mac is through Homebrew.

A single Homebrew install command sets up the basic framework needed to run local language models.

After installation, you’re free to enhance functionality by adding plugins for specific models.

For example, installing the gpt4all plugin provides access to additional local models from the GPT4All ecosystem.

This modular approach allows you to customize your setup based on your needs.
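The exact commands are not shown above. As a rough sketch, and assuming the tool in question is the `llm` command-line utility (our guess, based on the gpt4all plugin mention), the setup steps might look like the following. The `run_setup` helper and its `dry_run` flag are our own illustration. By default it only returns the command lines so you can review them before running anything.

```python
import shlex
import subprocess

# Assumption: the tool is the `llm` CLI, installed through Homebrew.
SETUP_COMMANDS = [
    ["brew", "install", "llm"],         # install the base command-line tool
    ["llm", "install", "llm-gpt4all"],  # add the GPT4All plugin
    ["llm", "models"],                  # list the models now available
]


def run_setup(commands=SETUP_COMMANDS, dry_run=True):
    """Return the command lines; when dry_run is False, also execute them in order."""
    lines = [shlex.join(cmd) for cmd in commands]
    if not dry_run:
        for cmd in commands:
            # check=True stops the setup at the first command that fails.
            subprocess.run(cmd, check=True)
    return lines
```

Running `run_setup()` lets you inspect the three commands, and `run_setup(dry_run=False)` would execute them in the terminal environment where Homebrew is installed.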

LM Studio provides a native Mac app optimized for Apple Silicon.

Download the Mac version from the official website and follow the installation prompts.

The software is designed to take full advantage of Apple Silicon in M1/M2/M3 chips.

Once installed, launch LM Studio and use the model browser to download your preferred language model.

The interface is intuitive and similar to the Windows version, but with optimizations for macOS.

Enable hardware acceleration to leverage the full potential of your Mac’s processing capabilities.

Closing thoughts

Running LLMs locally requires careful consideration of your hardware capabilities.

Memory is the primary limiting factor when running LLMs locally.
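To get a feel for what fits on your machine, a back-of-the-envelope estimate helps. The sketch below is a rule of thumb, not a precise measurement. It assumes memory use is dominated by the model weights (parameter count times bits per weight), plus an assumed 20% overhead for context and runtime, and it reserves an assumed 4GB for the operating system. All function names and default values here are our own.

```python
def estimate_model_memory_gb(params_billions: float, bits_per_weight: int = 4,
                             overhead_fraction: float = 0.2) -> float:
    """Rough memory needed to load a model, in decimal gigabytes."""
    # 1 billion parameters at 8 bits each is about 1 GB of weights.
    weights_gb = params_billions * bits_per_weight / 8
    # Assumed extra headroom for the context window and runtime buffers.
    return weights_gb * (1 + overhead_fraction)


def fits_in_ram(params_billions: float, ram_gb: float, bits_per_weight: int = 4,
                reserved_gb: float = 4.0) -> bool:
    """Check the estimate against RAM left after reserving some for the OS."""
    needed = estimate_model_memory_gb(params_billions, bits_per_weight)
    return needed <= ram_gb - reserved_gb
```

Under these assumptions, a 7-billion-parameter model quantized to 4 bits comes out at roughly 4.2GB and fits comfortably in 16GB of RAM, while a 70-billion-parameter model at the same precision comes out at roughly 42GB and does not.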

You’ll need macOS 13.6 or newer for optimal compatibility.

These tools provide valuable features for error detection, output formatting, and logging.
