Since its launch, Grok has undergone rapid development, positioning itself alongside established AI models from companies like OpenAI and Google.

The name aligns with xAI's mission of advancing AI's understanding of the universe.

xAI was established with a stated mission to understand the true nature of the universe.

Despite being introduced as an early-stage product, Grok demonstrated rapid iteration.

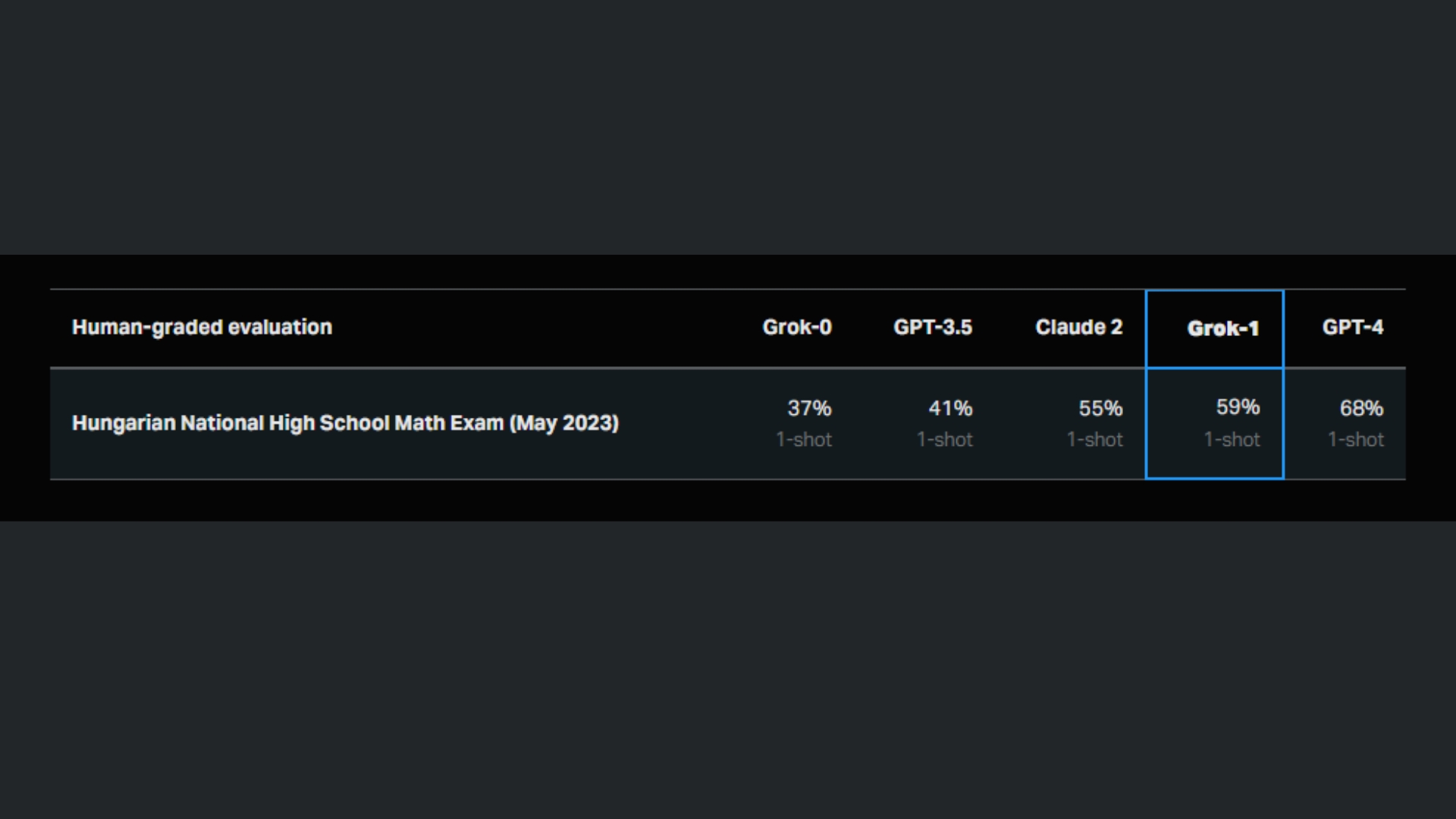

Grok-1

In October 2023, xAI introduced Grok-1, a 314-billion-parameter Mixture-of-Experts (MoE) model.

This improvement enabled more coherent long-form responses and better handling of complex, multi-step reasoning tasks.

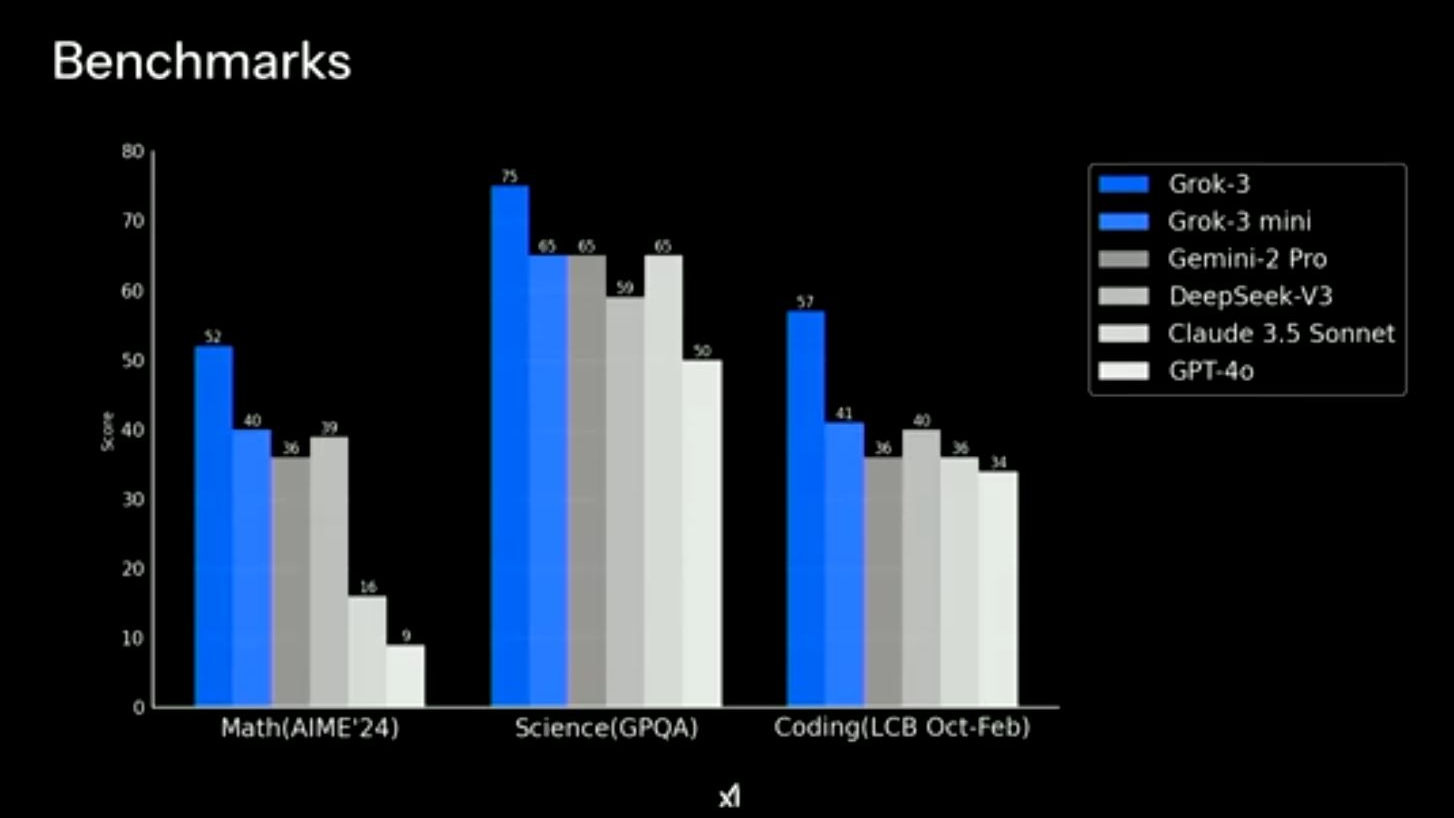

Benchmarks indicated that Grok-2 outperformed competitors such as Claude 3.5 Sonnet and GPT-4 Turbo in several reasoning and coding tasks.

Grok-2 mini was optimized for efficiency, balancing speed and accuracy for general-purpose use.

Further updates in December 2024 enhanced Grok-2's processing speed and accuracy while expanding its multilingual capabilities.

The MoE architecture remains a key differentiator, allowing xAI to optimize performance without significantly increasing computational costs.
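
To make the cost argument concrete, here is a minimal sketch of top-k expert routing, the general idea behind MoE layers. It is not xAI's implementation: the expert count, the `top_k` value, the vector size, and the toy "experts" (which simply scale their input) are all illustrative assumptions. It shows a router scoring every expert while only the few highest-scoring ones are actually evaluated for a given token.

```python
import math
import random


def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical stand-in for an expert network: it just scales its input."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, router_weights, experts, top_k):
    """Route one token vector x through only the top_k highest-scoring experts."""
    # One router score per expert: dot product of x with that expert's router row.
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    # Keep the indices of the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the gate weights over the chosen experts only.
    gates = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](x)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


random.seed(0)
NUM_EXPERTS, TOP_K, DIM = 8, 2, 4  # illustrative sizes, not a published configuration
experts = [make_expert(i + 1) for i in range(NUM_EXPERTS)]
router_weights = [
    [random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)
]
token = [0.5, -1.0, 0.25, 2.0]

output, chosen = moe_forward(token, router_weights, experts, TOP_K)
print(f"experts evaluated: {len(chosen)} of {NUM_EXPERTS}")
```

In this toy setup, per-token work grows with `top_k` rather than with the total number of experts, which is why adding experts can raise model capacity without a matching rise in compute per token.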

Training data sources include publicly available web content, X posts, and other structured datasets.

However, as with any AI model, challenges remain.

xAI continues to refine its approach, with plans for future iterations and additional open-source releases.

xAI has also explored AI applications in gaming and other interactive domains.