
OpenAI's new o3 and o4-mini models are both hallucinating.

This in itself isn't out of the ordinary, as most AI models still do this.

But these two new models seem to hallucinate more than several of OpenAI's older models.

Historically, although new models have continued to hallucinate, the rate has dropped with each new release.

The potentially larger issue is that OpenAI doesn't know why this has happened.

What are hallucinations?

If you've used an AI model, you've most likely seen it hallucinate.

This is when the model produces incorrect or misleading results.

Sometimes this is a small, unimportant issue.

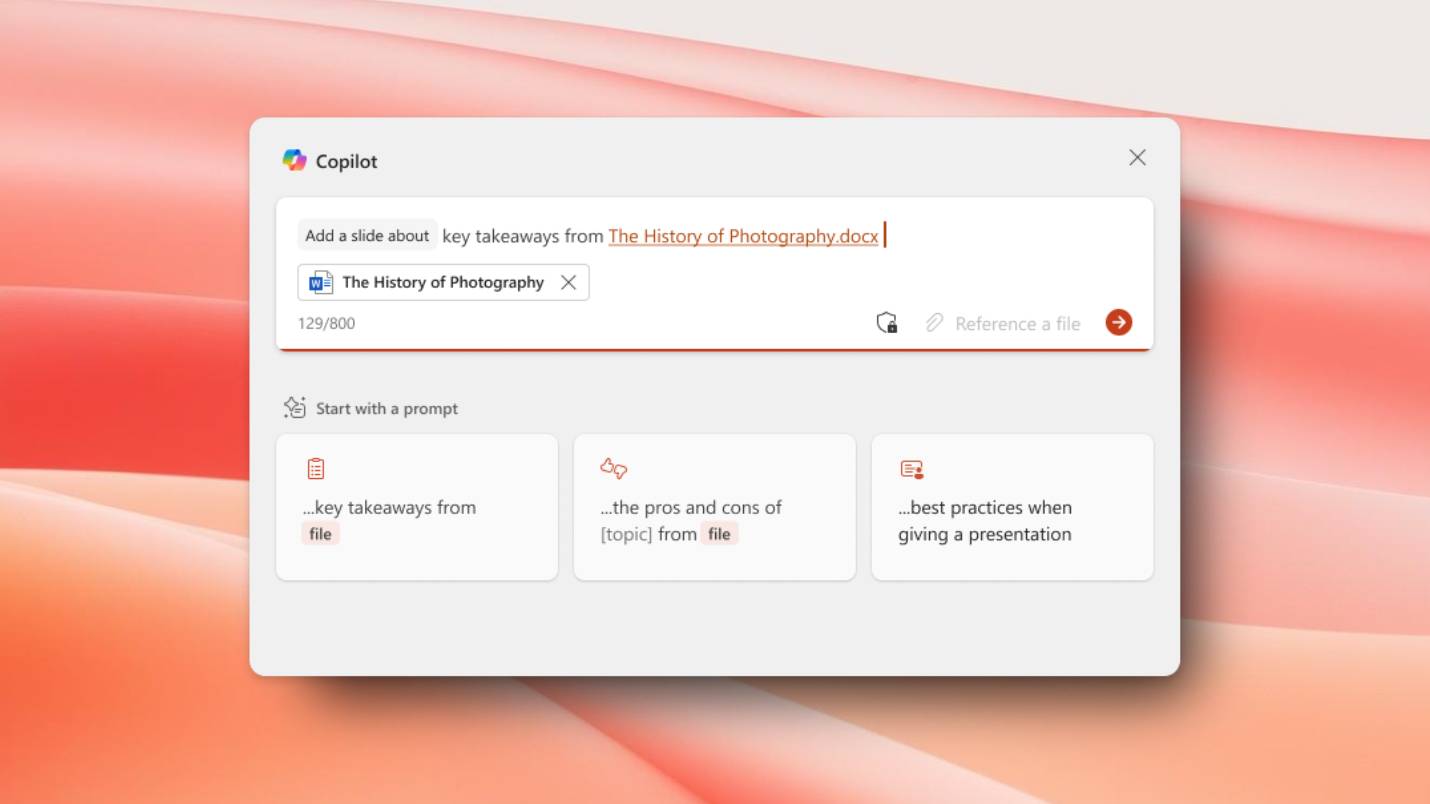

What does this mean for the o3 and o4-mini models?

According to OpenAI's report, o4-mini's weaker results are "expected, as smaller models have less world knowledge and tend to hallucinate more."

"However, we also observed some performance differences comparing o1 and o3," the report states.

"More research is needed to understand the cause of this result."

OpenAI's report found that o3 hallucinated in response to 33% of questions.

That is roughly double the hallucination rate of OpenAI's previous reasoning models.

Because both models are designed for more complex tasks, this could become a bigger problem going forward.

As mentioned above, hallucinations can be a harmless, even funny, quirk in low-stakes prompts.