
Typical AI models demand large computers with powerful processors and extreme amounts of memory.

More importantly, they can also be installed and run by non-technical users.

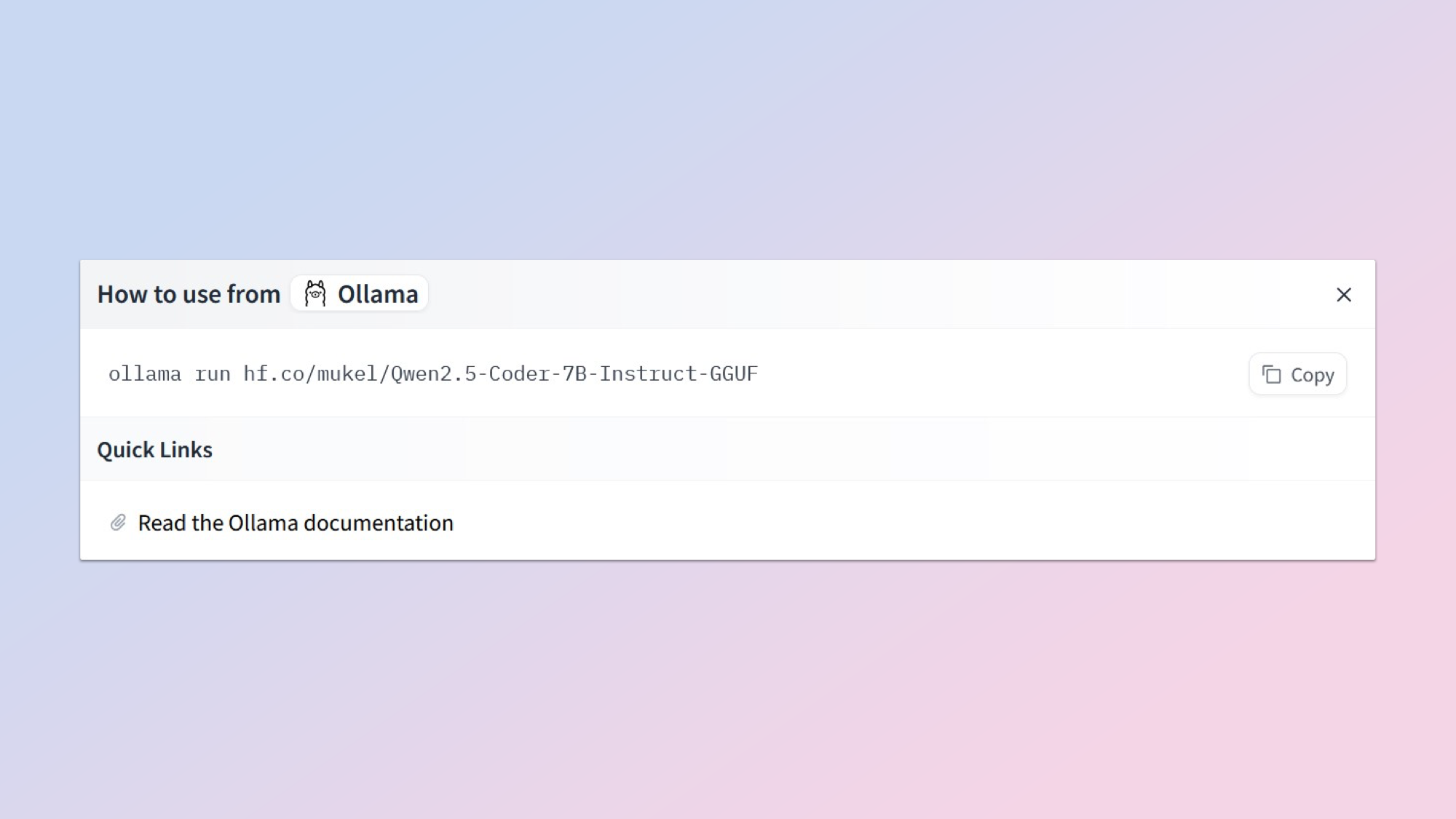

Previously, models first had to be made available through the Ollama library before you could download and run them on your laptop.

This makes the whole process easier, and Hugging Face says it will work to simplify things even further.

Why is the GGUF format so important?

You’re now able to run models on Hugging Face with Ollama.

Let’s go open-source and Ollama!

How to use GGUF models with Ollama?

This will pop up a window with the URL address of the model for copying.

On Windows, go to the search bar, type cmd and hit the Enter key.

When the terminal window appears, paste in the URL you just copied (Ctrl-V) and press Enter again.

At this point, Ollama will automatically download the model ready for use.

Quick, easy and painless.

The process on a Mac is more or less the same: just open Terminal instead of cmd.
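For readers who would rather see what gets pasted into the terminal, here is a minimal sketch in Python. It assumes the copied text takes the form `ollama run hf.co/<username>/<repository>`, optionally followed by `:<quantization>`, which is the pattern Hugging Face's documentation describes at the time of writing. The helper name `build_ollama_command` and the repository `example-user/example-model-GGUF` are hypothetical and used only for illustration.

```python
import shlex
from typing import List, Optional


def build_ollama_command(repo_id: str, quantization: Optional[str] = None) -> List[str]:
    """Build the argument list for `ollama run` against a Hugging Face GGUF repo.

    repo_id is "<username>/<repository>"; quantization is an optional tag
    (for example "Q4_K_M") that selects one of the GGUF files in the repo.
    """
    username, sep, repository = repo_id.partition("/")
    if not (username and sep and repository) or "/" in repository:
        raise ValueError(f"expected '<username>/<repository>', got {repo_id!r}")

    # Assumed target format: hf.co/<username>/<repository>[:<quantization>]
    target = f"hf.co/{repo_id}"
    if quantization:
        target += f":{quantization}"
    return ["ollama", "run", target]


if __name__ == "__main__":
    # Hypothetical repository name, not a real model recommendation.
    command = build_ollama_command("example-user/example-model-GGUF", "Q4_K_M")
    print(shlex.join(command))
    # To actually start the model (requires Ollama to be installed):
    # import subprocess; subprocess.run(command, check=True)
```

The sketch only prints the command rather than running it, so it is safe to try on a machine without Ollama installed. Uncommenting the `subprocess.run` line would hand the same arguments to Ollama, which would then download the model as described above.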

It should be noted that these GGUF files can also be run using a growing variety of user clients.

Some of the most popular include Jan, LM Studio and Msty.

The format is also supported by the Open WebUI chat program.

And the performance is getting better all the time.

It’s good to see that open source continues to thrive, even when up against venture capital-backed megacorps.
