
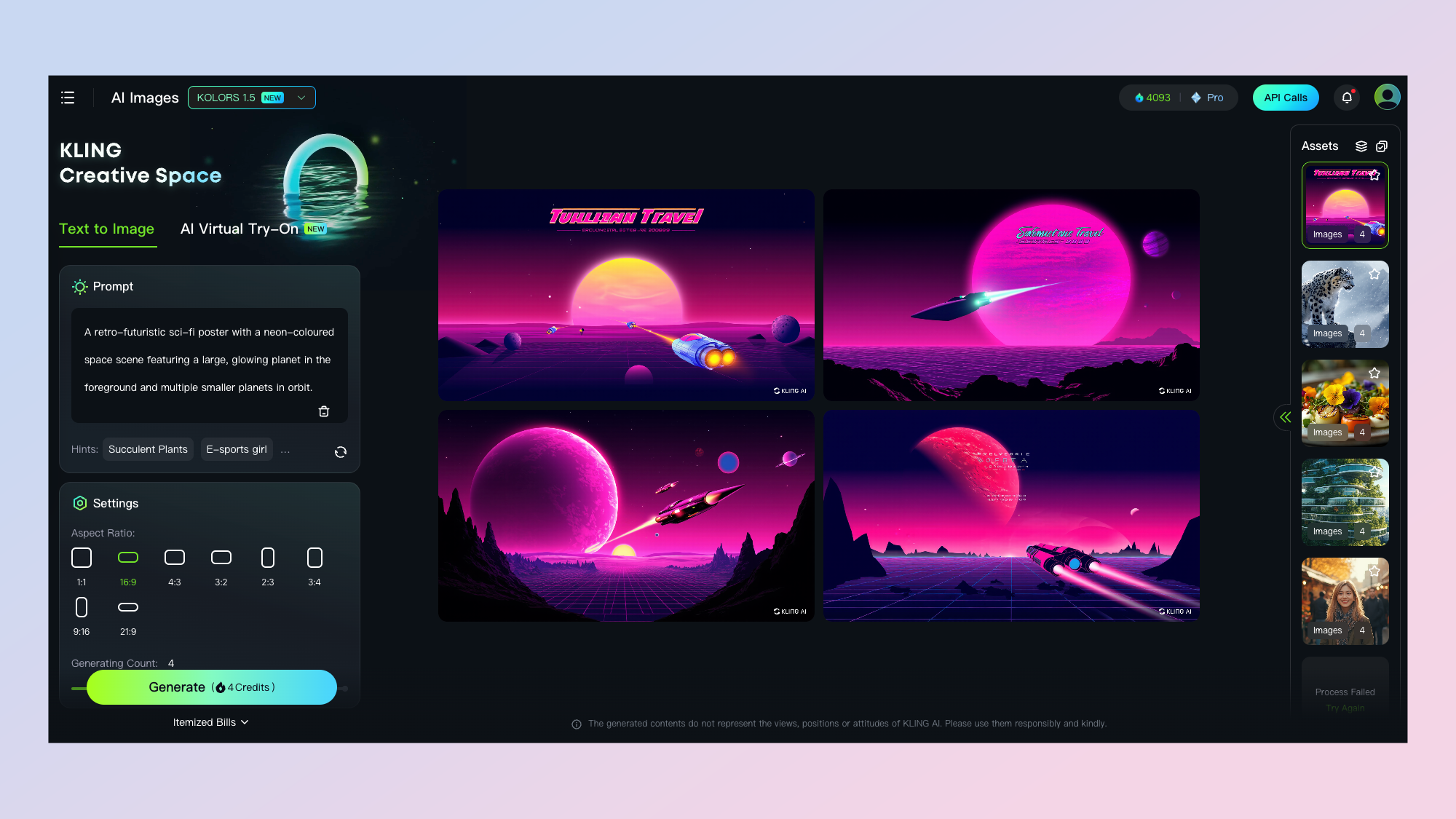

For this story, I’ve focused on platforms working in the AI video space, rather than avatar creation.

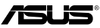

Kling and Runway are the most similar, offering full video creation platforms with lip-sync as a feature.

So I’ve picked those three for this test.

(I’ll explain how many rounds I ended up running at the end.)

I’ve focused on 10-second snippets even though Hedra can go up to a minute.

This is to keep consistency across all three models.

Hedra works slightly differently to Kling and Runway.

The final results are similar.

Round 1: The Static Face Test

This should be the easiest.

The background is a soft, blurred color gradient with no distractions.

Skin tones should be natural, and the character should look calm, with no notable emotion.

"I don’t really exist but can still speak to you thanks to the wonders of lip-synching."

Runway, thanks to Turbo, is near real-time, and Hedra is animating an image, so it’s quick.

I wasn’t convinced by the flickering, so I’m giving it to Hedra on this occasion.

The lighting is bright and warm, creating a cheerful and energetic mood.

The background is a soft, light pastel colour that doesn’t distract from the facial expression.

Something to smile about.

This will test the ability to capture emotional context.

All three were nightmarish renders.

It is clear that if you want a good lip-sync, you should start with a closed mouth.

I can’t crown a winner, but I will reluctantly give it to Hedra for the least horrific mouth movement.

The face shows determination and focus.

Never heard from him again.

None of them were perfect, but I think Hedra and Runway did a better job than Kling.

Overall, I think Runway took this round for the most realistic lip-sync.

Hedra’s Character-2 came out on top and to some inevitable degree.

If I were to repeat this experiment, I’d use external sounds to create more consistency, always use generated images and carry out a wider range of tests.

I just wish Kling were quicker.
