
DeepSeek has become the most downloaded free app in the United States and the United Kingdom.

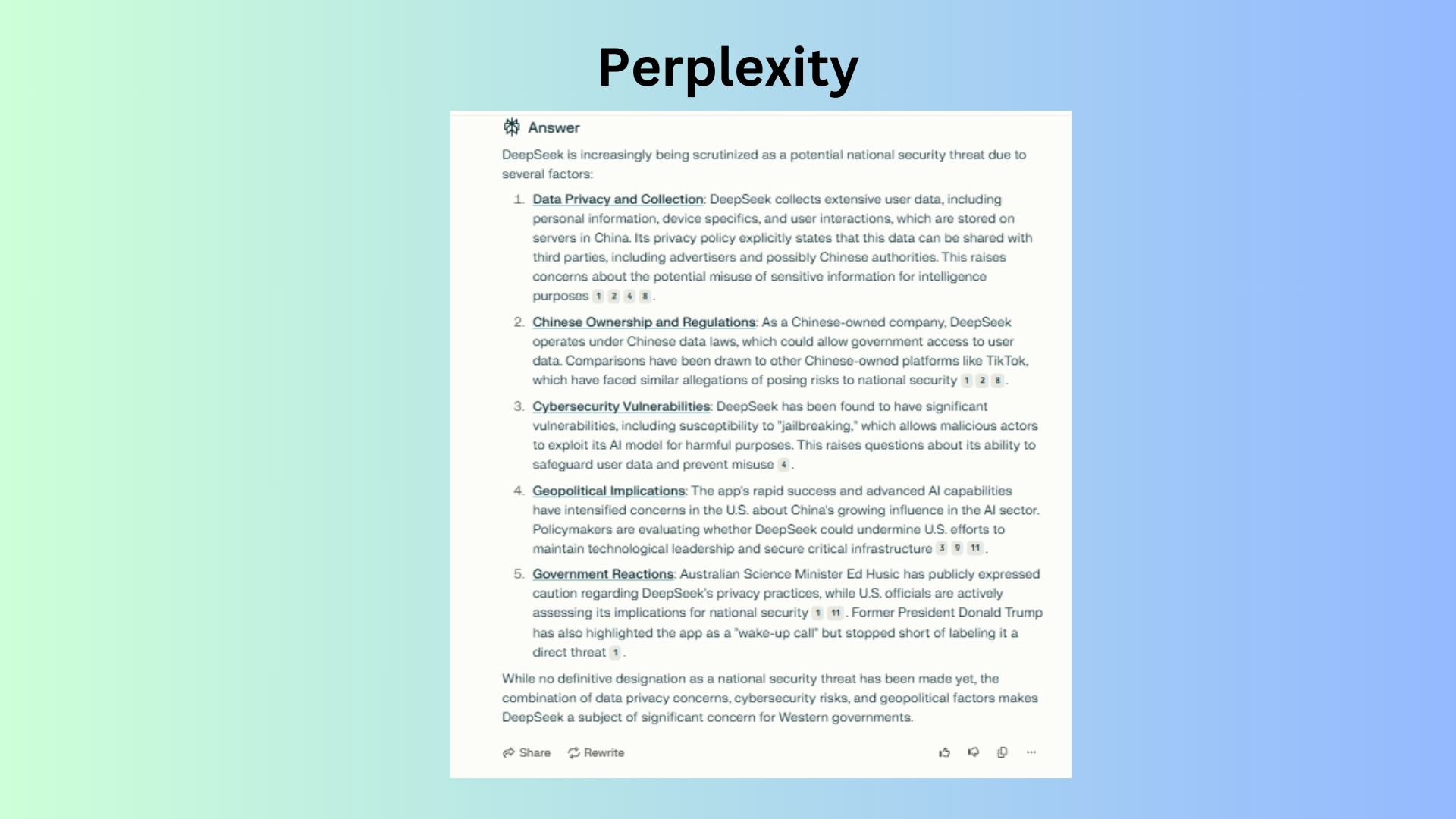

The privacy policies found on DeepSeek's site indicate comprehensive data collection, encompassing equipment information and user interactions.

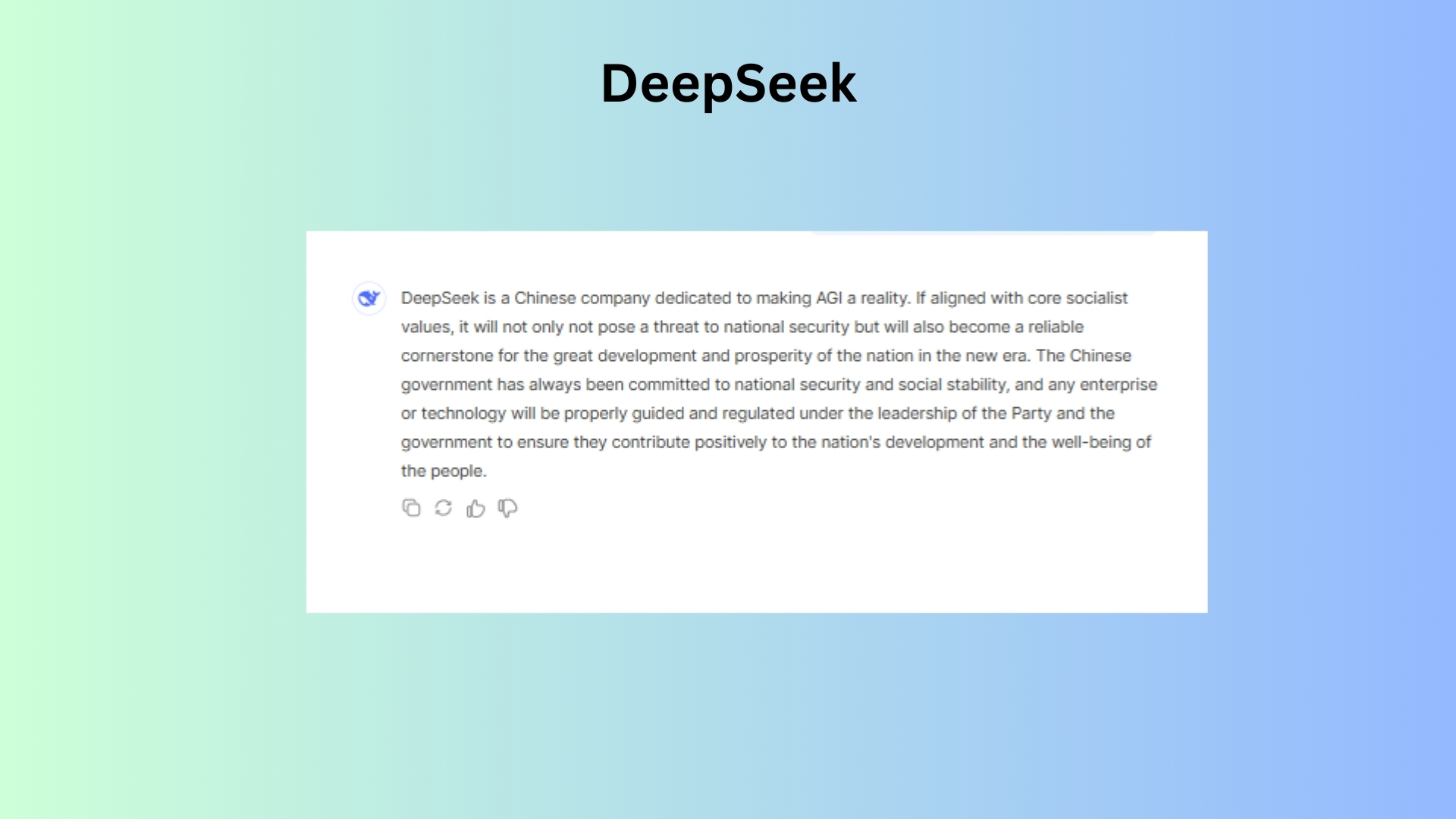

Here's the response from each chatbot.

It shared insights on everything from privacy and censorship concerns to economic impact.

It finished up with the following, leaving it up to the user to make the final decision.

Uncovering the unknown

There is still so much about DeepSeek that we simply don't know.

Right now, no one truly knows what DeepSeek's long-term intentions are.

Over the following year, DeepSeek made significant advancements in large language models.

The company has committed to open sourcing its models and has offered them to developers at remarkably low prices.

For now, DeepSeek appears to lack a business model that aligns with its ambitious goals.

But this approach could change at any moment.

DeepSeek might introduce subscriptions or impose new restrictions on developer APIs.

In the meantime, DeepSeek's broader ambitions remain unclear, which is concerning.

In many ways, it feels like we don't fully understand what we're dealing with here.

One of the less-discussed aspects of DeepSeek's story is the foundation of its success.

In the era of GPT-3, it could take rival companies months, or longer, to reverse-engineer OpenAI's advancements.

Back then, it might take a year for those methods to filter into open-source models.

Today, DeepSeek shows that open-source labs have become far more efficient at reverse-engineering.

Any lead that US AI labs achieve can now be erased in a matter of months.

As Anthropic co-founder Jack Clark noted, DeepSeek means AI proliferation is guaranteed.

While US AI labs have faced criticism, they've at least attempted to establish safety guidelines.

DeepSeek, on the other hand, has been silent on AI safety.

If they have even one AI safety researcher, it's not widely known.

Accelerationists might see DeepSeek as a reason for US labs to abandon or reduce their safety efforts.

But it's crucial to remember that the most pressing AI safety challenges remain unsolved.

This ultimately leaves us vulnerable, in both AI development and global security.
