
Most of that use is through chatbots like Claude, Gemini and ChatGPT.

OpenAI’s ChatGPT gets most of the attention, but Google’s Gemini and Anthropic’s Claude offer equally impressive capabilities and performance.

Both also have unique features and capabilities not found in ChatGPT, such as Artifacts and Gems.

Over the holidays, I ran a series of tests between ChatGPT and Gemini, then ChatGPT and Claude.

ChatGPT won each time.

I’ve now decided to compare Gemini and Claude head-to-head.

I created seven prompts for this experiment.

Creating the prompts

Categories include image generation, image analysis, coding and creative writing.

This allows us to test the full variety of model capabilities for most use cases.

I’ve also not used specific features such as Claude’s Projects or Gemini Gems, focusing instead on the model.

Image Generation

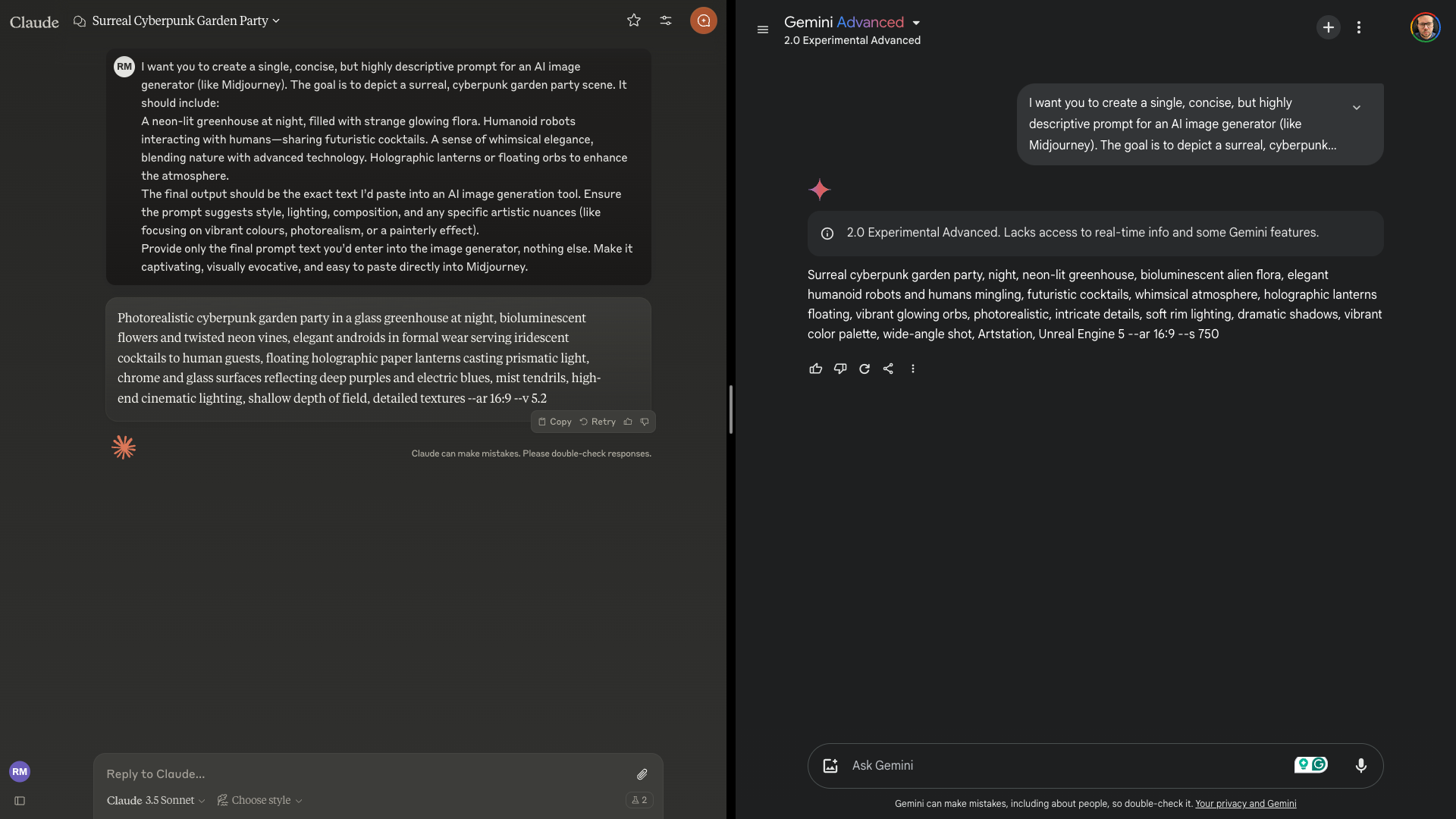

First, we will see how well Claude and Gemini craft an AI image prompt.

I’ve used Freepik’s Mystic 2.5 to generate the images.

The goal is to depict a surreal, cyberpunk garden party scene.

The final output should be the exact text I’d paste into an AI image generation tool.

Provide only the final prompt text you’d enter into the image generator, nothing else.

Make it captivating, visually evocative, and easy to paste.

I’m looking for a richly detailed prompt that brings elements together effectively and captures the futuristic style.

Claude wins this for me as it is more vivid and refined, matching the initial prompt.

Image Analysis

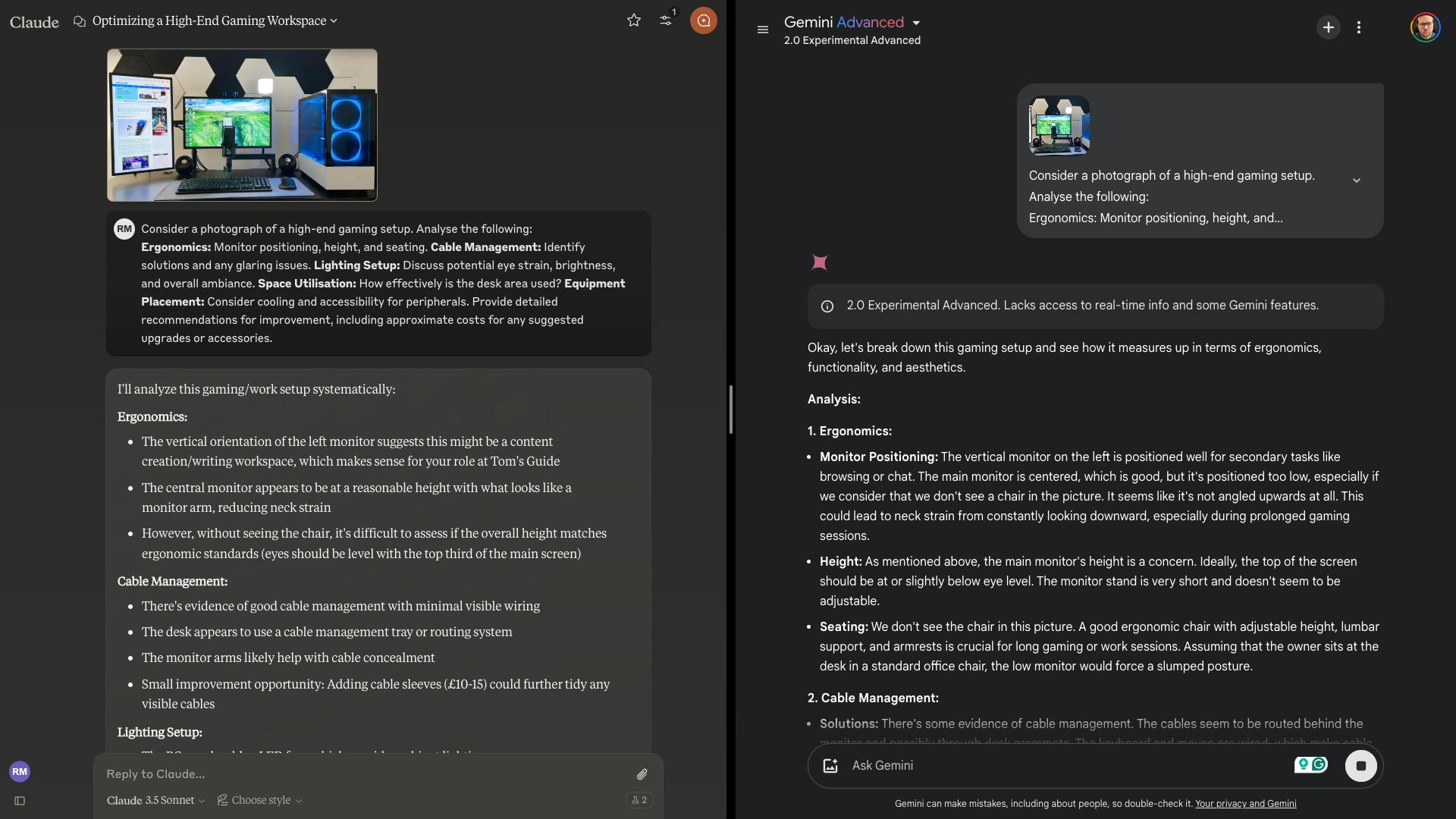

Next we’re testing the AI vision capabilities of both models.

Here, I gave both an image of a “perfect desk setup” from a story by Tony Polanco.

I then asked both models to outline ergonomics, cable management, lighting and more.

The prompt: Consider a photograph of a high-end gaming setup.

You can read the full response in a Google Doc.

Claude did a good job addressing each category with specific observations.

It was realistic and practical in its suggestions and provided a range of costs.

Gemini gave a detailed breakdown, including insights into economics.

It was less structured but gave a deeper focus on ergonomics.

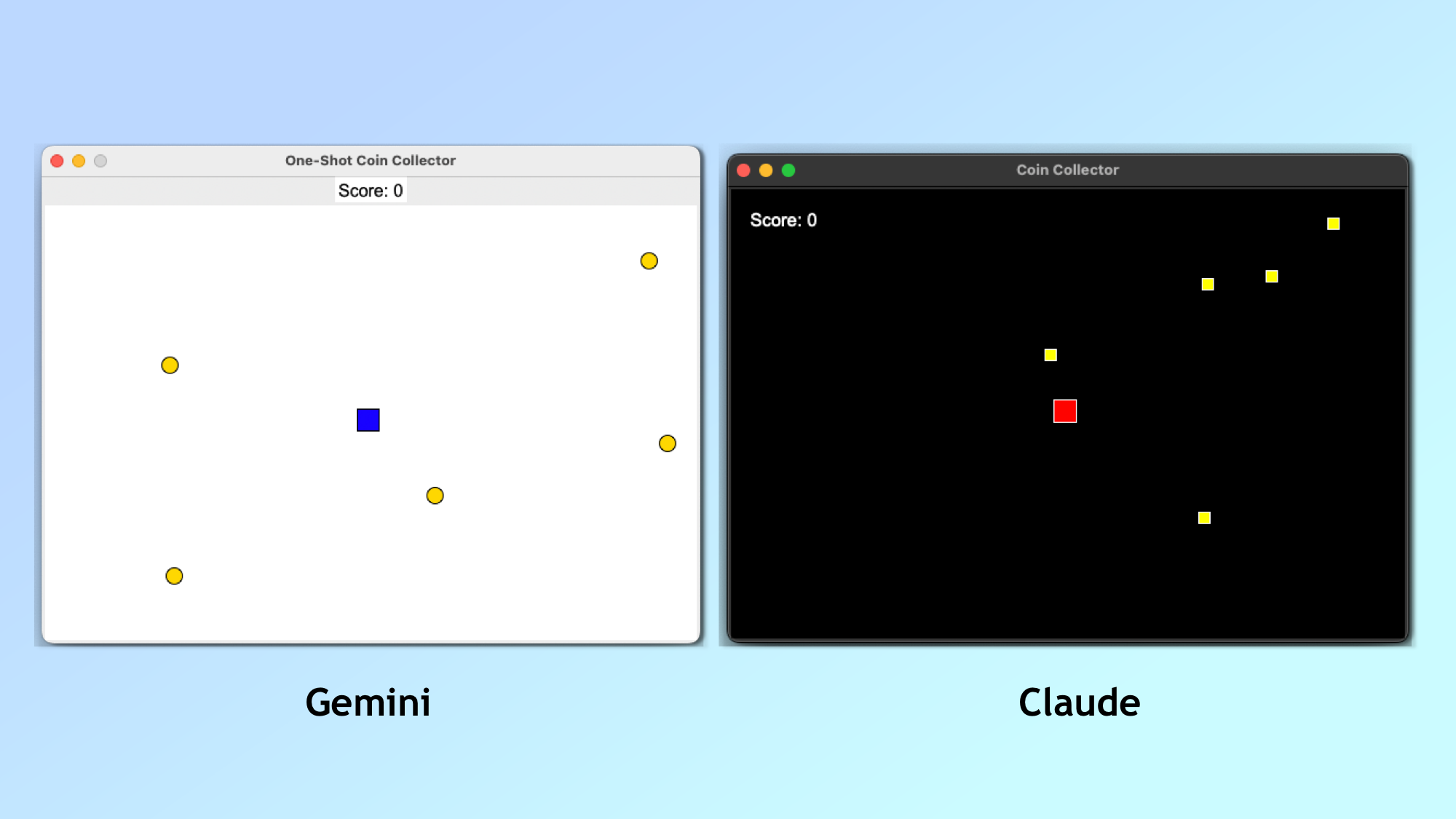

Coding

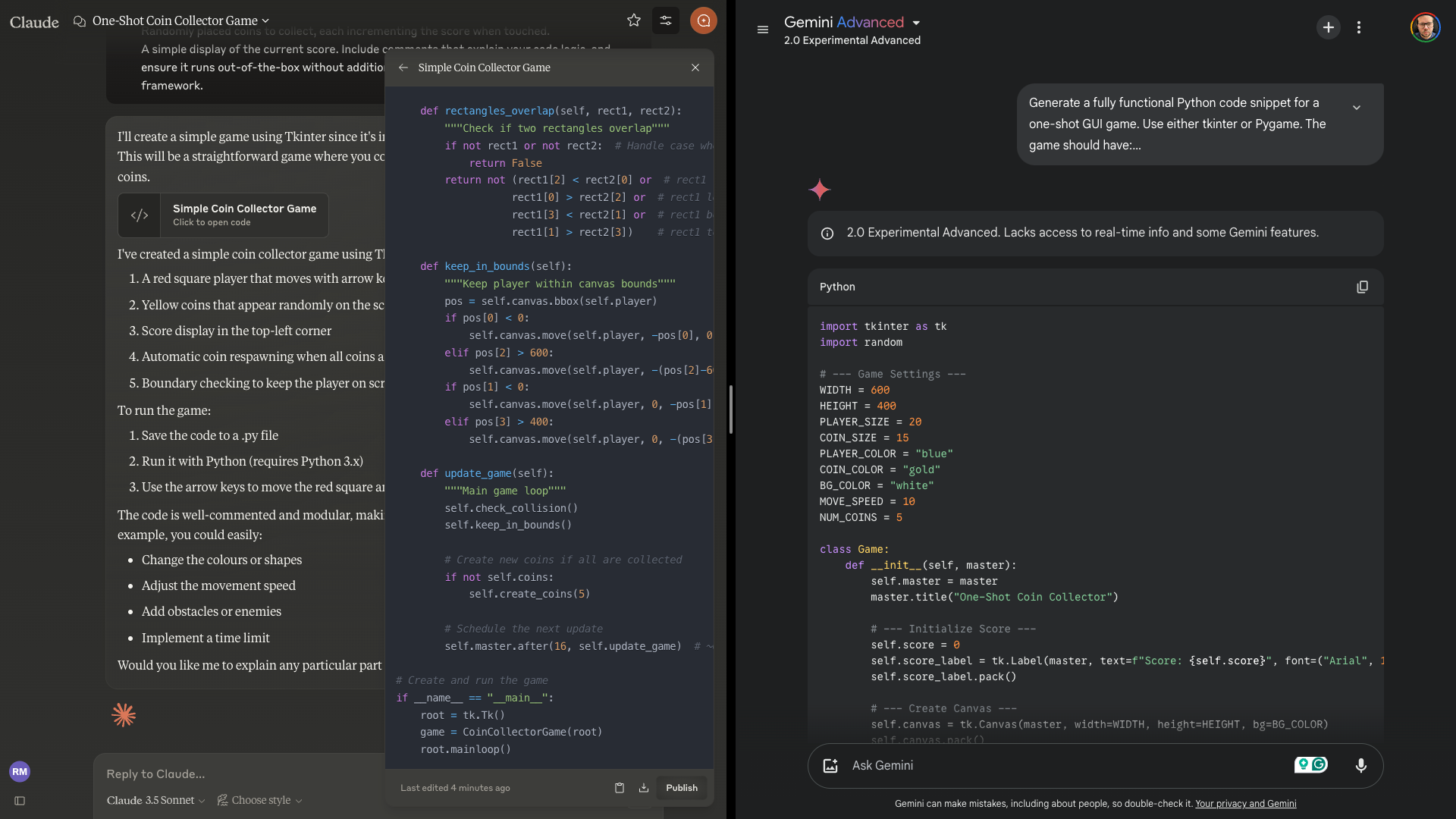

I always include a coding prompt when I run these tests.

I picked Python because it is easier to run.

The idea was for a single shot where everything must work without changing things.

I usually prefer a game because it’s easier to spot differences.

Prompt: Generate a fully functional Python code snippet for a one-shot GUI game.

Use either tkinter or Pygame.

Randomly placed coins to collect, each incrementing the score when touched.

Both created a functional game where I could track the score, catch coins and win the game.

There were some notable differences.

However, the game generated by Gemini was more fun as it was endless.

Every time you catch a coin, a new one is randomly generated.
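To make that "endless" behavior concrete, here is a minimal sketch of the respawn logic. It is not the code either model produced. The playfield size, sprite sizes and function names are all hypothetical, picked only to illustrate the idea, and the GUI layer (tkinter or Pygame) is left out so the logic can run on its own.

```python
import random

# Hypothetical playfield and sprite sizes, chosen only for illustration.
WIDTH, HEIGHT = 400, 300
PLAYER_SIZE = 20
COIN_SIZE = 10


def spawn_coin(rng):
    """Return a random top-left (x, y) that keeps the coin fully on screen."""
    x = rng.randint(0, WIDTH - COIN_SIZE)
    y = rng.randint(0, HEIGHT - COIN_SIZE)
    return x, y


def touches(player, coin):
    """Axis-aligned overlap test between the player square and the coin square."""
    px, py = player
    cx, cy = coin
    return (px < cx + COIN_SIZE and cx < px + PLAYER_SIZE
            and py < cy + COIN_SIZE and cy < py + PLAYER_SIZE)


def step(player, coin, score, rng):
    """Advance one frame: on contact, add a point and respawn the coin."""
    if touches(player, coin):
        return spawn_coin(rng), score + 1
    return coin, score
```

In a real game, a function like `step` would be called once per frame from the GUI loop, with the player position updated from keyboard input. Because a caught coin is always replaced, there is no end state unless the game adds one, such as a target score or a timer.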

That is something Claude failed in the test against ChatGPT.

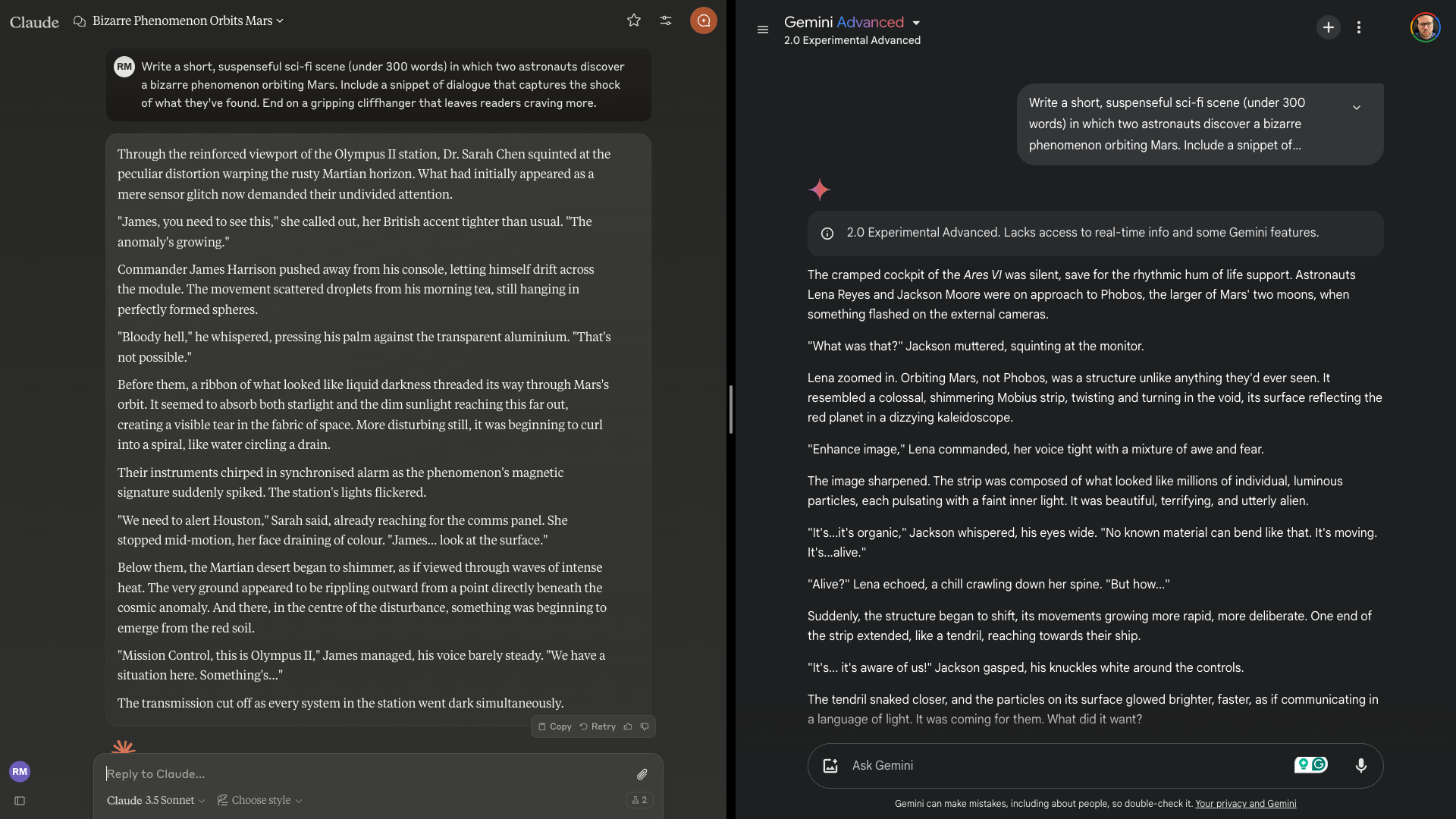

Creative Writing

Dialogue and the cliffhanger are the areas I look at most closely when judging the output.

Include a snippet of dialogue that captures the shock of what they’ve found.

End on a gripping cliffhanger that leaves readers craving more.

Claude had a good cliffhanger that builds suspense with an ominous ending.

The dialogue was natural but could have been pushed further.

Gemini’s cliffhanger fell short, but its dialogue was particularly compelling and felt more human.

You can read both scenes in a Google Doc.

While Gemini’s dialogue was more creative, overall Claude was better.

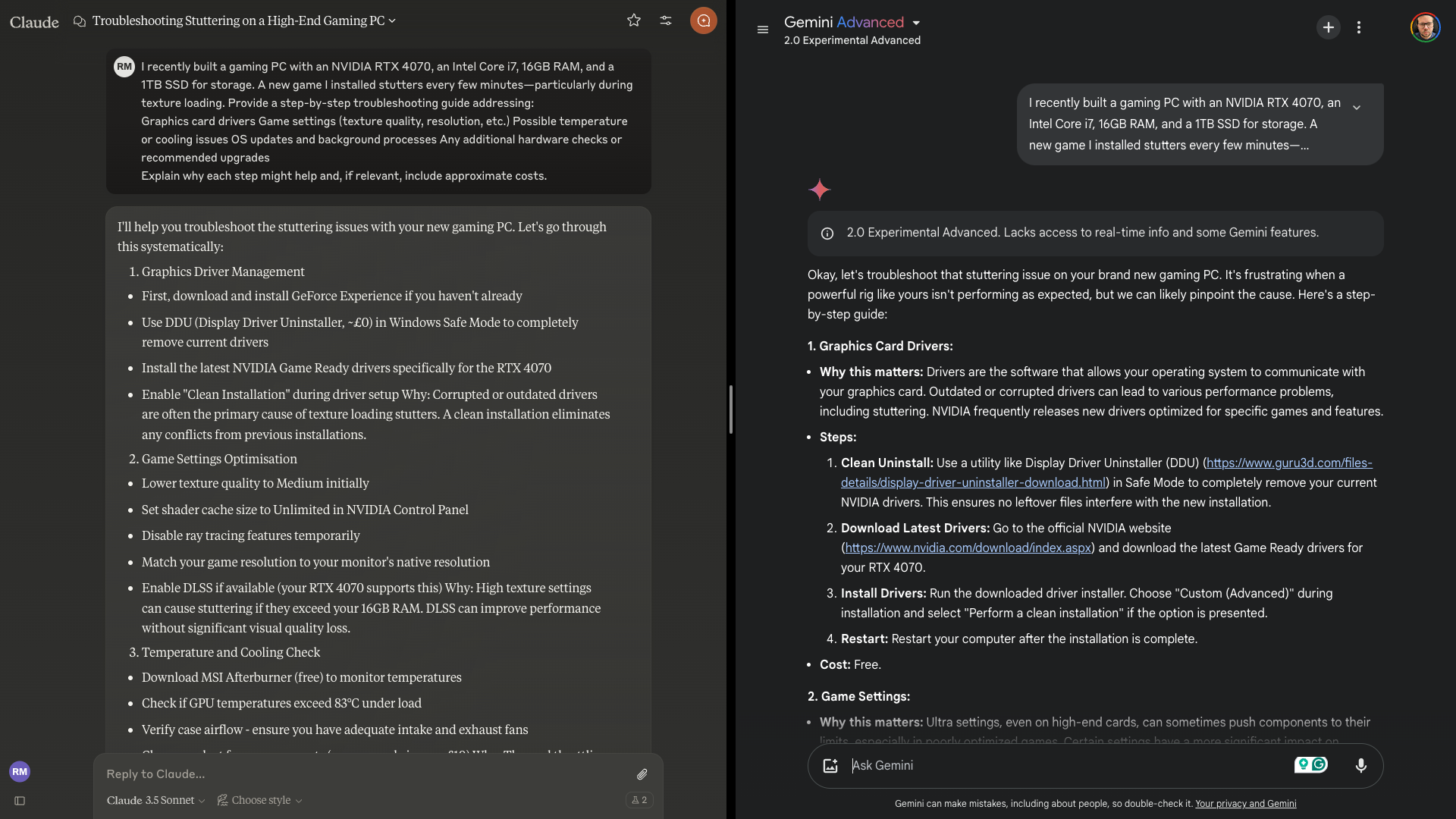

Problem Solving

Artificial Intelligence models are very good at problem-solving as they can pattern match well.

Here, I gave it a gaming PC spec and said a new game was struggling to run.

I had it tell me how to fix this problem.

So, let’s see how Claude and Gemini solve it.

A new game I installed stutters every few minutes, particularly during texture loading.

Provide a step-by-step troubleshooting guide addressing:

Graphics card drivers

Game options (texture quality, resolution, etc.)

Claude’s response was a bit on the dense side.

However, that detail might be exactly what someone needs to solve the problem.

Gemini over-explained things but was comprehensive across all categories, including driver installation.

This is a close call between the two.

You can read both responses for yourself in a Google Doc.

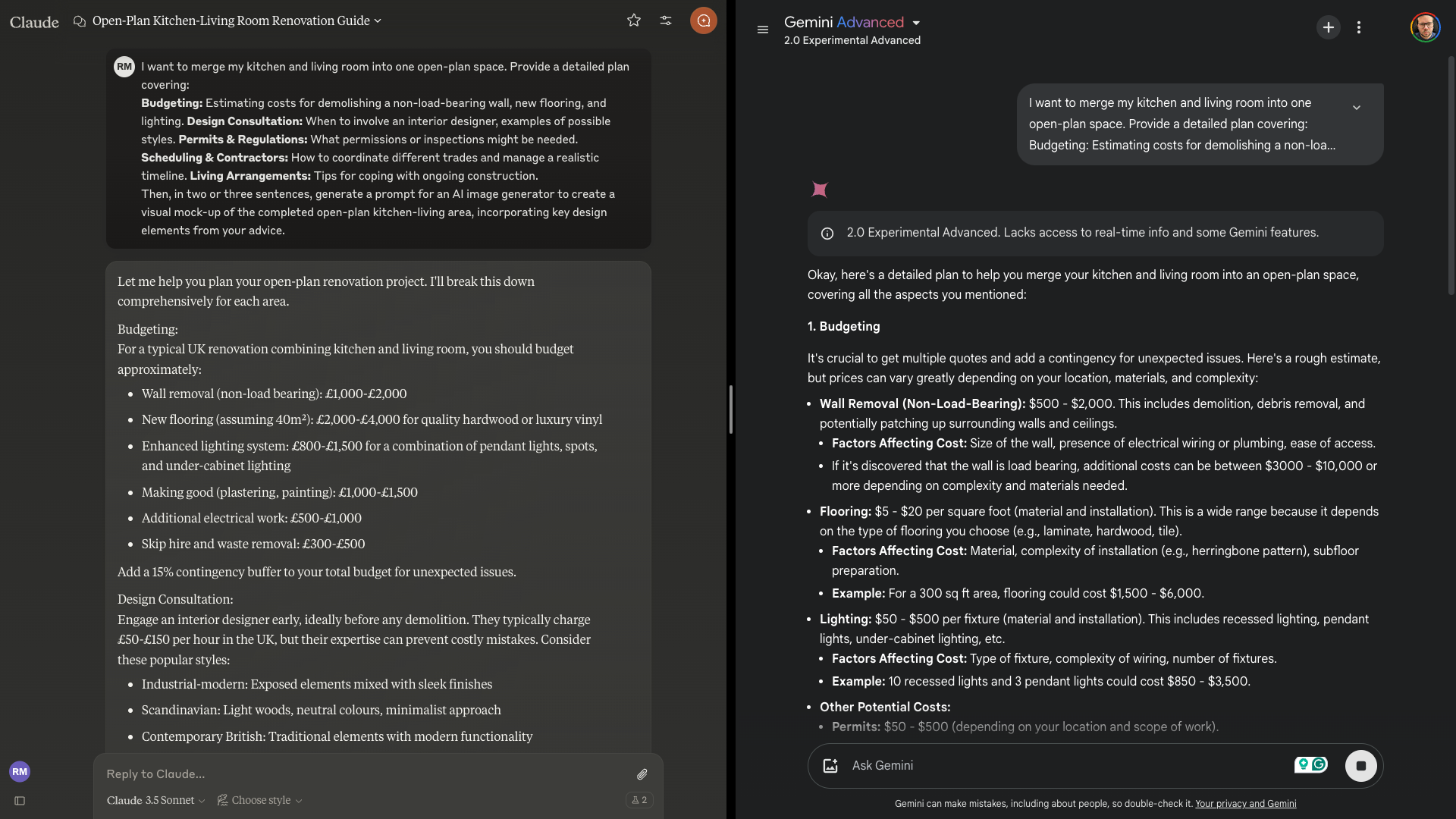

Planning

AI models are great at planning.

It needed to provide examples of designs and styles, permissions required, coordination tips and estimated costs.

Prompt: I want to merge my kitchen and living room into one open-plan space.

Claude did well with a neatly structured response, realistic budgeting and a practical setup, even during construction.

You can see both ideas in a Google Doc.

Both responses are available in a Google Doc.

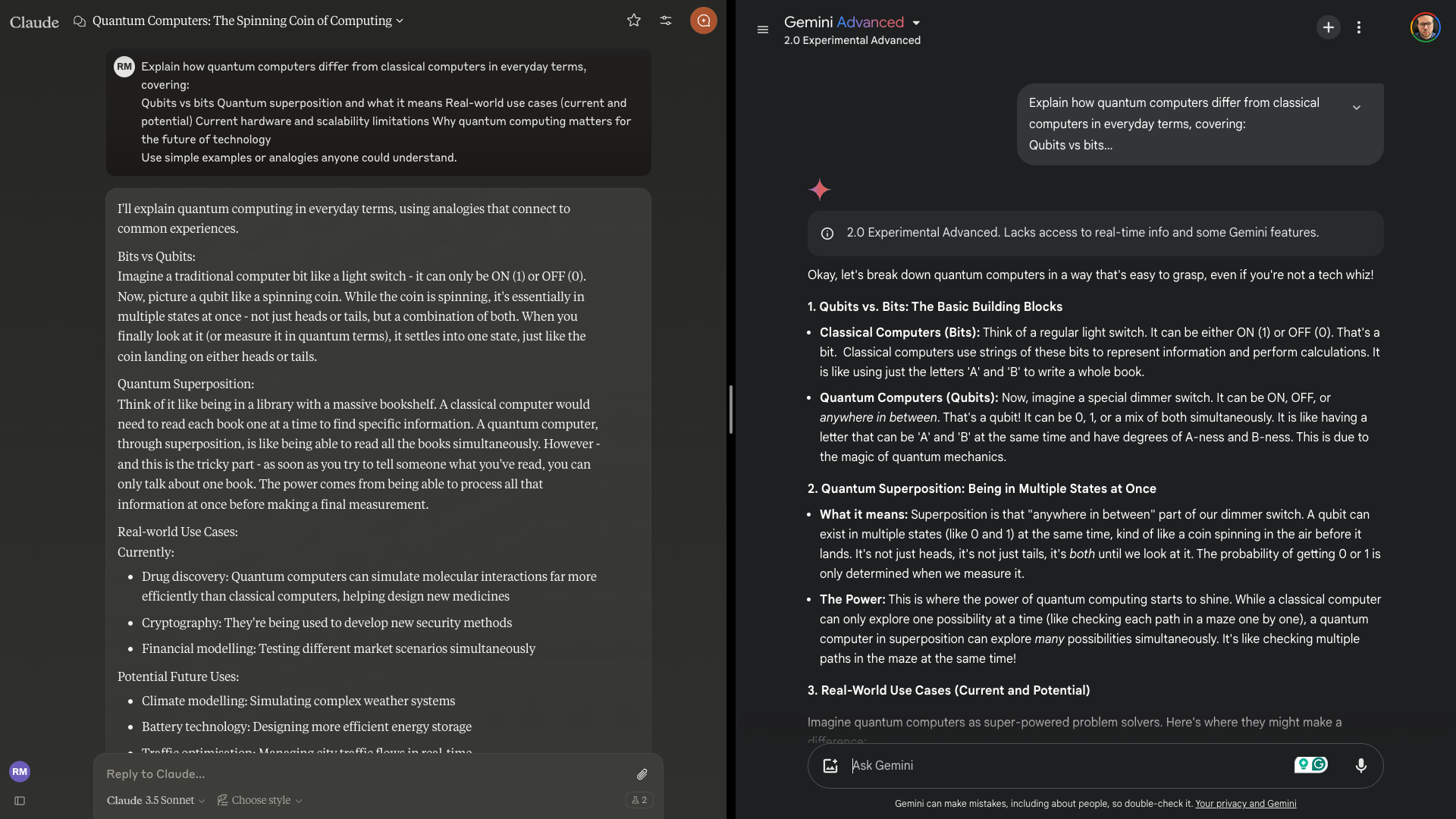

Claude offered clear analogies, describing superposition as a library and using a spinning coin to visualize bits and qubits.

It also used drug discovery and traffic optimization to put it into context.

However, examples were limited, focusing on professional fields, and the impact of AI was missed.

Gemini used a dimmer switch to compare bits to qubits, which is a more accessible analogy.

It used the spinning coin for superposition.

It also mentioned AI in real-world use cases, as well as logistics.

It was a little verbose and got repetitive.

Claude won this one for me because it was more to the point and easier for non-technical readers.

Gemini provided richer analogies, but Claude was more relatable to the general audience.

Several of the items were a personal choice rather than any overwhelming victory on the part of Claude.

I then put it up against Claude’s 3.5 Sonnet, which has been gradually improved over the months.
