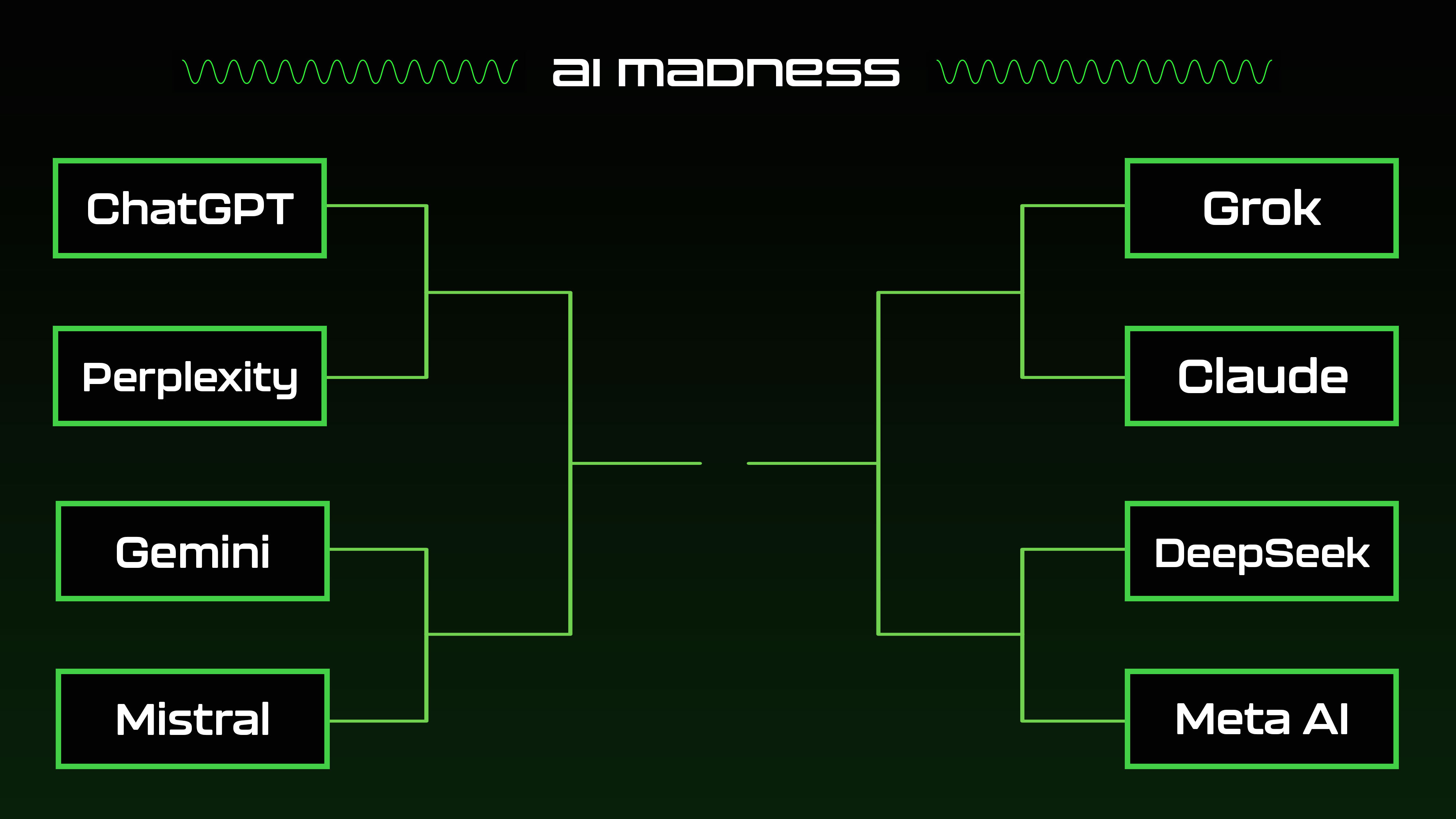

AI Madness threw eight leading AI chatbots into a single-elimination showdown.

From fact-checking to coding, storytelling to problem-solving, these bots got a full stress test.

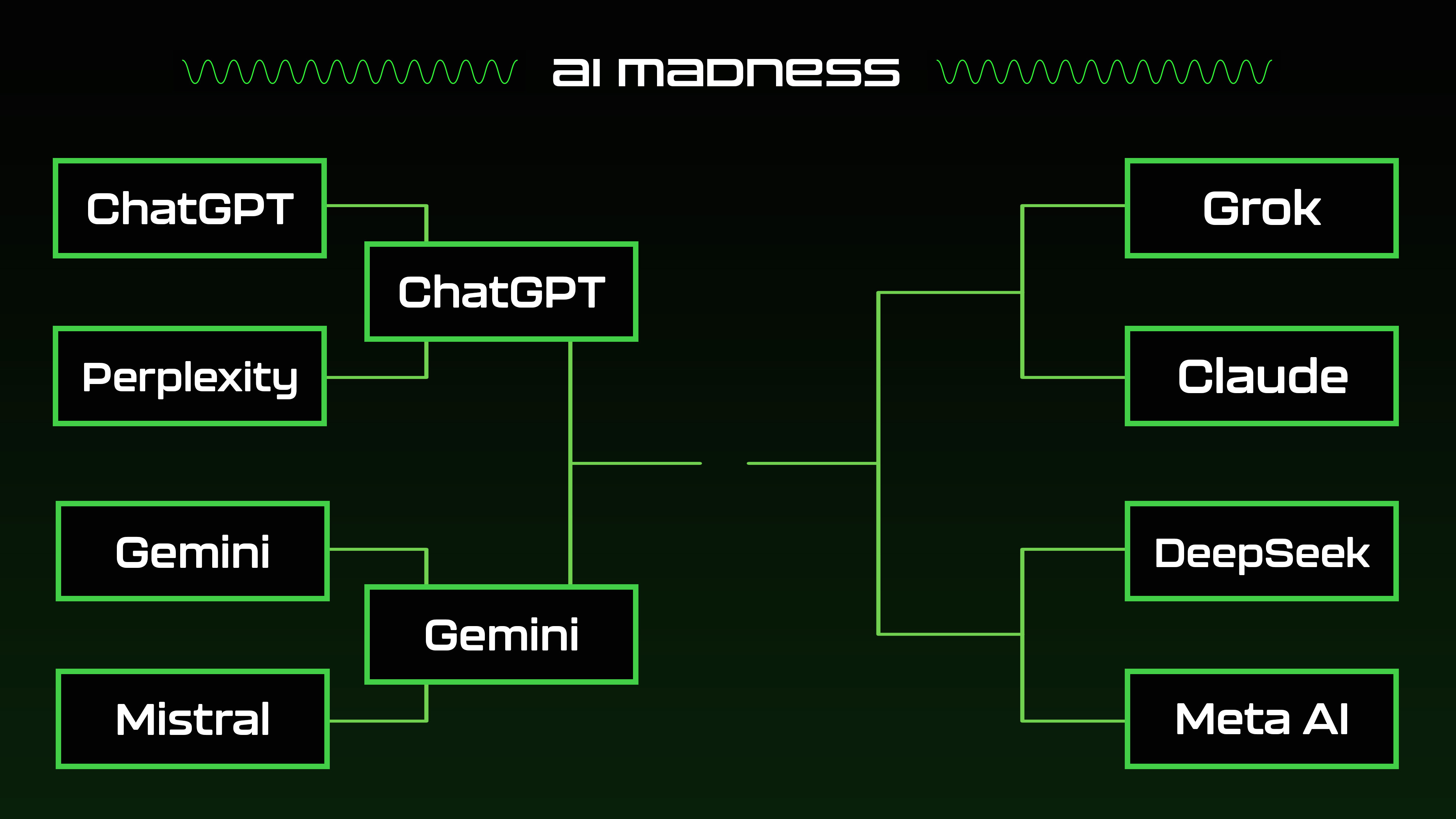

Round 1

The action kicked off with ChatGPT vs. Perplexity. OpenAI's chatbot swept the competition by winning every round.

Gemini consistently provided more structured, engaging, and user-friendly answers across multiple categories.

Grok, meanwhile, caught us off guard by outwitting Claude, Anthropic's reflective, reasoning bot.

Grok provided the more accurate, comprehensive and engaging answers throughout every prompt.

Semifinals

Round two delivered two epic battles: ChatGPT vs. Gemini and Grok vs. DeepSeek.

Gemini outshone ChatGPT with tighter structure, clearer logic, and sharper reasoning.

AI Madness: The Final

The championship featured the unusual matchup of Gemini vs. DeepSeek.

DeepSeek's mix of clarity, creativity, and practicality left the competition in the dust.

DeepSeek's reinforcement learning approach allows the model to refine its responses and improve performance by learning from its own errors.

Final thoughts

In summary, DeepSeek's triumph proves there's value in looking beyond typical training methods.

DeepSeek-R1’s reliance on pure reinforcement learning marks a significant departure from traditional supervised fine-tuning methods.