
But are they really that useful, or is it all just wishful thinking?

I thought it might be worth looking at three of the main contenders to see what they offer.

There are two major reasons for its explosive success.

First, it can run on extremely modest hardware, especially in its smaller versions.
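A quick back-of-the-envelope calculation shows why the smaller versions fit on modest hardware. The weights alone take roughly parameters × bits per weight ÷ 8 bytes. The sizes below are illustrative, and treating 4-bit quantization as the typical local setup is an assumption: real model files vary, and the context cache and runtime add memory on top.

```python
# Rough lower bound on the memory needed just to hold a model's weights.
# Actual usage is higher: the context (KV) cache and runtime overhead add to it.

def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Illustrative sizes: full 16-bit weights vs. an assumed 4-bit quantized build.
    for size in (3, 7, 14):
        for bits in (16, 4):
            print(f"{size}B @ {bits}-bit ~ {weight_memory_gb(size, bits):.1f} GB")
```

By this estimate a 7B model drops from about 14 GB at 16-bit to about 3.5 GB at 4-bit, which is within reach of an ordinary laptop.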

This ranges from basic chat search (e.g. "How can I remove stains from a cotton T-shirt?") to handling tax queries or other personal issues.

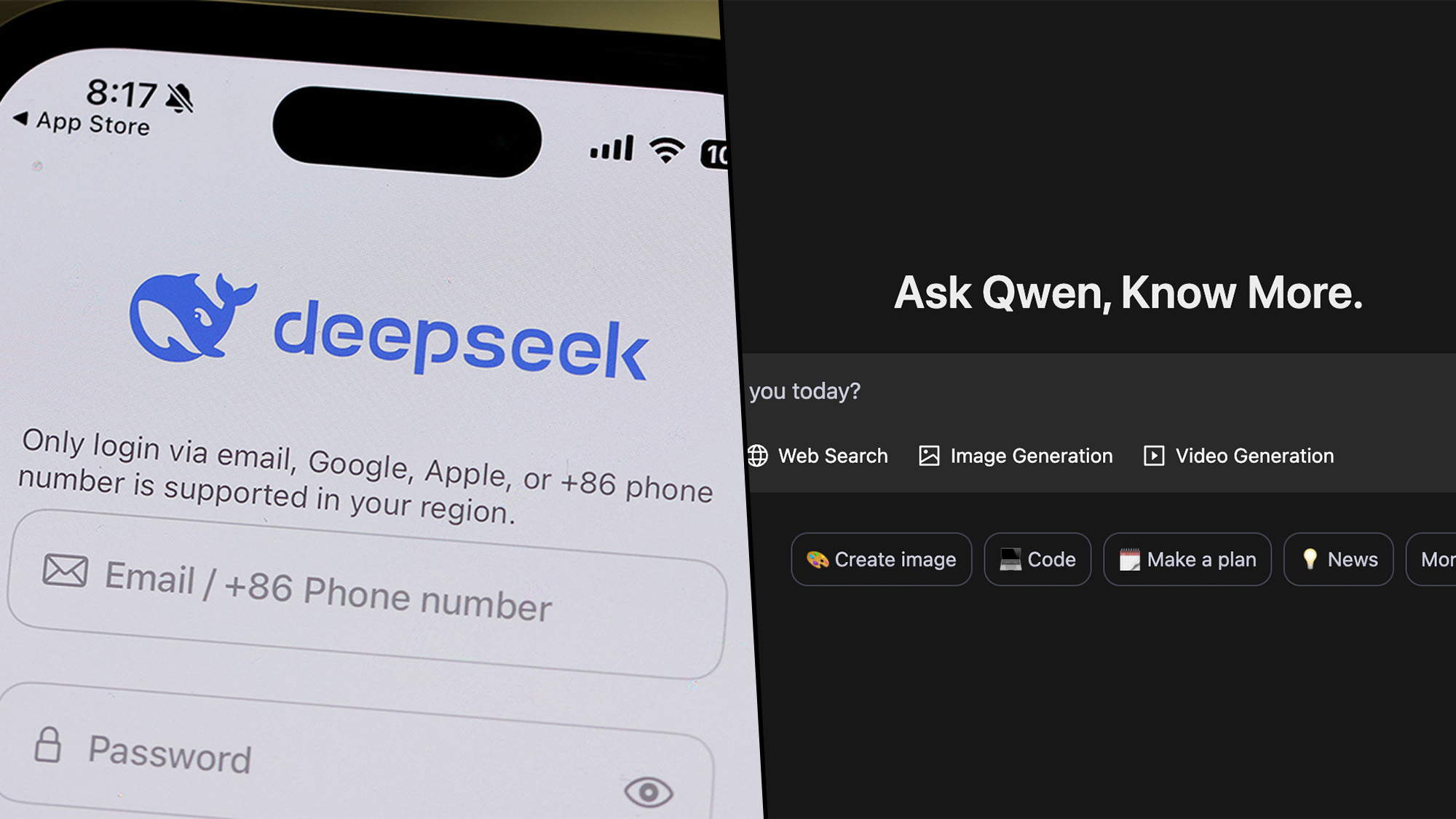

Qwen

Another good option is the Qwen range of models.

I currently have three versions of Qwen 2.5 on my PC, specifically the 7B, 14B and 32B models.

There's also a neat coding version, which offers free code generation for creating small, simple apps and utilities.

The one area where it’s still especially strong is vision.

So I run Llama 3.2-vision to scan documents and decipher images.
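As a minimal sketch of what scanning a document looks like in practice, the script below sends an image to a vision model through Ollama's local REST API. It assumes Ollama is running on its default port and that the `llama3.2-vision` model has already been pulled; the prompt text and the `receipt.jpg` filename are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: Ollama is running locally on its default port and
# llama3.2-vision has been pulled (`ollama pull llama3.2-vision`).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_vision_request(image_bytes: bytes, prompt: str,
                         model: str = "llama3.2-vision") -> dict:
    """Build a non-streaming /api/generate payload carrying one base64 image."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,  # return one JSON object rather than a stream of chunks
    }


def describe_image(path: str,
                   prompt: str = "Transcribe any text in this document.") -> str:
    """Send an image file to the local model and return its text reply."""
    with open(path, "rb") as f:
        payload = build_vision_request(f.read(), prompt)
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # The generated text is in the "response" field of the reply.
        return json.loads(resp.read())["response"]
```

Calling `describe_image("receipt.jpg")` would return the model's transcription as a plain string. Everything stays on the local machine, which matters when the documents are personal.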

I also have a custom-tuned version of Llama 3, which I love using for general knowledge.

It seems to consistently deliver more detailed and accurate responses per question.

Points to note

There are a few things to note about using local models.

The first is that newer is almost always better.

This can restrict their usefulness for more complex tasks, but is also slowly changing as the tech matures.

A great place to start is by searching the open source model catalog at Hugging Face.

Most models can be installed and run from Ollama or the LM Studio app.
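Once a model is installed in Ollama, other programs can talk to it over a local HTTP API. The sketch below assumes Ollama's default address and that the `qwen2.5:7b` tag has been pulled. It sends a prompt and lists which models are installed. LM Studio offers its own local server, which this example does not cover.

```python
import json
import urllib.request

# Assumptions: Ollama's local server is on its default port (11434) and the
# model tag below has been pulled, e.g. with `ollama pull qwen2.5:7b`.
BASE_URL = "http://localhost:11434"


def build_generate_payload(prompt: str, model: str = "qwen2.5:7b") -> bytes:
    """Encode a non-streaming request body for the /api/generate endpoint."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def ask(prompt: str, model: str = "qwen2.5:7b") -> str:
    """Send one prompt to a local model and return the generated text."""
    req = urllib.request.Request(
        f"{BASE_URL}/api/generate",
        data=build_generate_payload(prompt, model),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # With stream=False the reply is a single JSON object whose
        # "response" field holds the generated text.
        return json.loads(resp.read())["response"]


def installed_models() -> list[str]:
    """Return the tags of locally installed models via GET /api/tags."""
    with urllib.request.urlopen(f"{BASE_URL}/api/tags") as resp:
        return [m["name"] for m in json.loads(resp.read())["models"]]
```

For example, `print(ask("How can I remove stains from a cotton T-shirt?"))` runs the article's everyday query against the local model. Swapping the `model` argument is enough to compare the 7B, 14B and 32B versions side by side.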