
Luma Labs, the startup behind the popular Dream Machine AI creativity platform, is releasing a new video model.

According to Luma, Ray2 can distinguish interactions between different objects and object types.

This video was generated using Luma Labs' Ray2 model.

This includes interactions between humans, creatures and vehicles, which adds to the realism.

Ray2 uses a native multimodal architecture that was trained directly on video data to improve the accuracy of characters.

A ball of matter

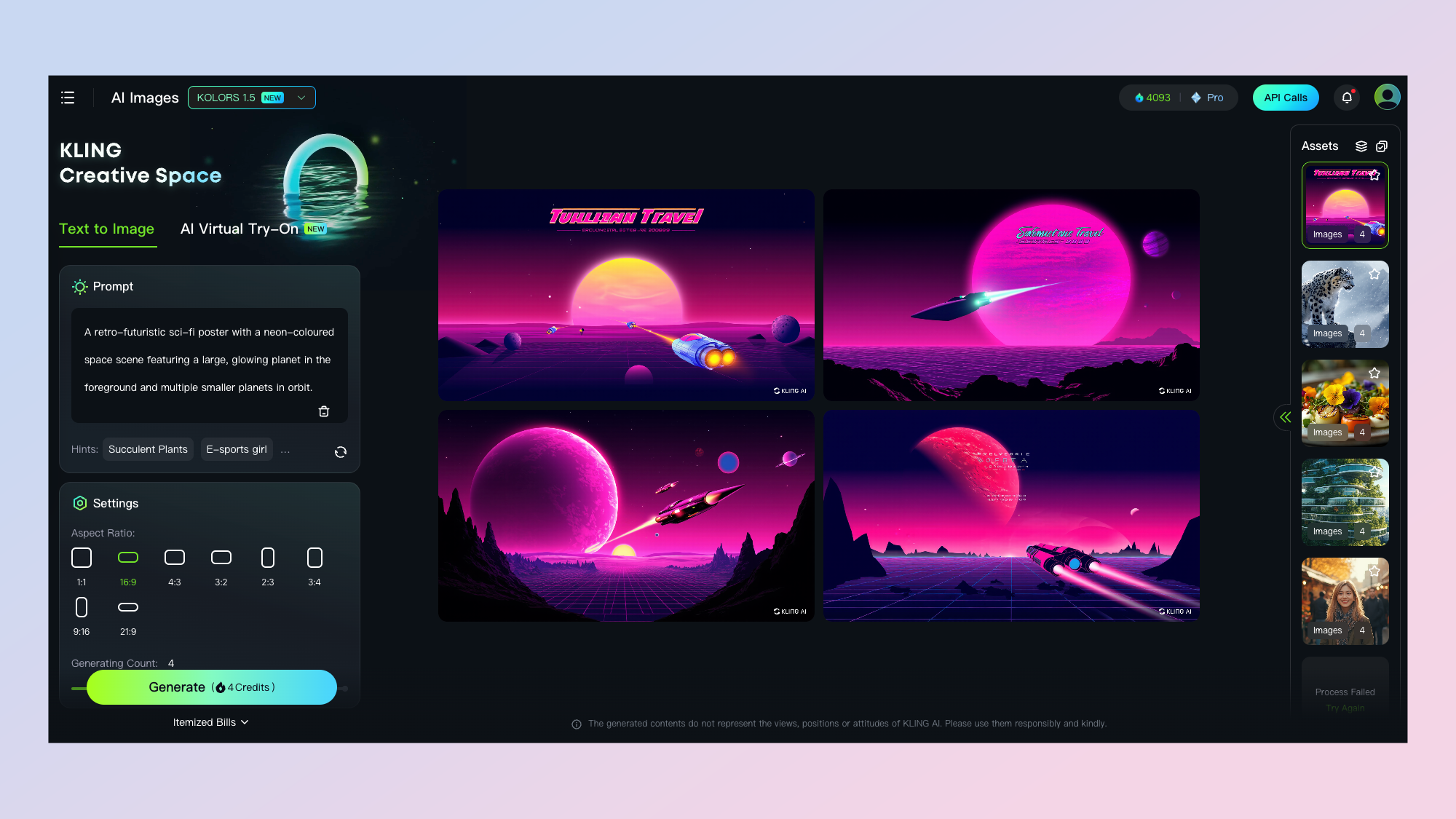


This would be great for planet scenes.

He said: "Scaling video model pretraining is leading to far more accurate physics!"

He said he was enjoying the improvements they have made to motion.

Sweeping mountain vista

New frontier.

It shows how well the model handles camera motion over complex terrain.

Other models develop a slight game-like style in these scenes.

Shared by Luma's William Shen, it depicts a cat tentatively moving across a couch before leaping.

Man on a tightrope


In it, a man tentatively crosses a ravine on a tightrope, showing some very accurate footwork.

Allen wrote: "Look at the physics in the Ray2 generation… Holodeck is coming."

Final thoughts

AI video is advancing faster than almost any other form of artificial intelligence.

I haven't tried Luma Ray2 yet, as it hasn't been released.
